ACR Lab’s first multi-year major research project is tentatively titled “Transnational Networks of Cinema and Digital Media in Asia.” This project explores how the cinema and digital media industries of East, South, and Southeast Asia have interacted with and influenced one another. Positioning Hong Kong as a ‘contact zone’ for Asia’s film industries, the project draws attention to the industrial networks of directors, actors, authors, technicians, genres and styles, formats, consumers, languages, and capital across trans- and inter-regional contexts. Accordingly, ACR Lab will organize an international conference and a series of virtual and in-person lectures and talks during the 30-month period of the CityU start-up grant (2024-2026).

Forthcoming Publications


Remapping the Cold War in Asian Cinemas

Edited by Sangjoon Lee (lead editor) and Darlene Espena. Amsterdam University Press (June 2024).

https://www.aup.nl/en/book/9789463727273/remapping-the-cold-war-in-asian-cinemas


The Routledge Companion to Asian Cinemas

Edited by Zhen Zhang, Debashree Mukherjee, Intan Paramaditha, and Sangjoon Lee. Routledge (June 2024).

https://www.routledge.com/The-Routledge-Companion-to-Asian-Cinemas/Zhang-Mukherjee-Paramaditha-Lee/p/book/9781032199405

PROJECTS

Design Strategies for Concurrent Sonification-Visualisation of Geodata

- PI :: PerMagnus Lindborg, PhD, Associate Professor, School of Creative Media, City University of Hong Kong

- Co-I :: Sara Lenzi, PhD, Ikerbasque Research Fellow, University of Deusto, Spain

- Co-I :: Paolo Ciuccarelli, Professor of Design, Northeastern University, Boston, USA

About

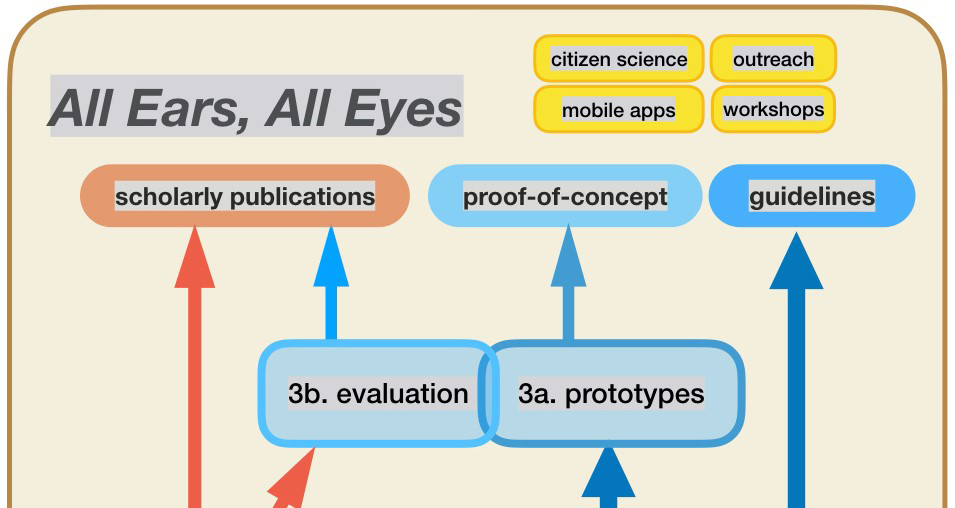

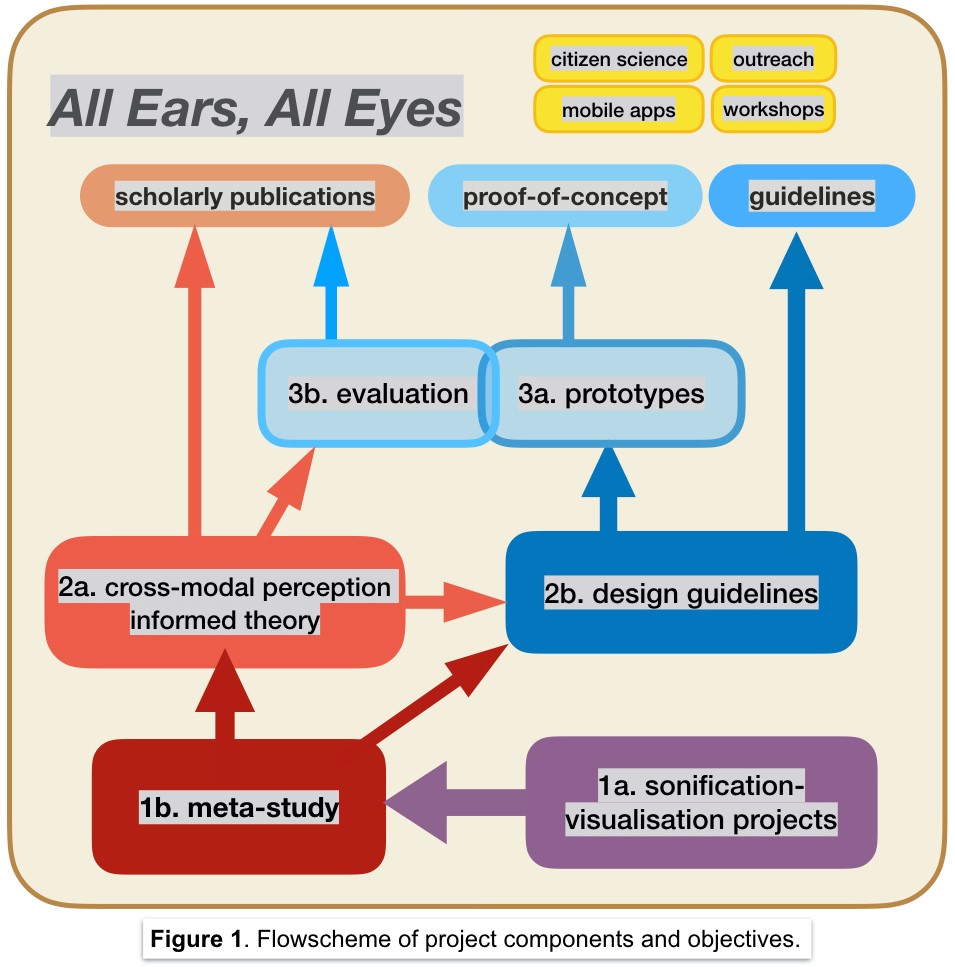

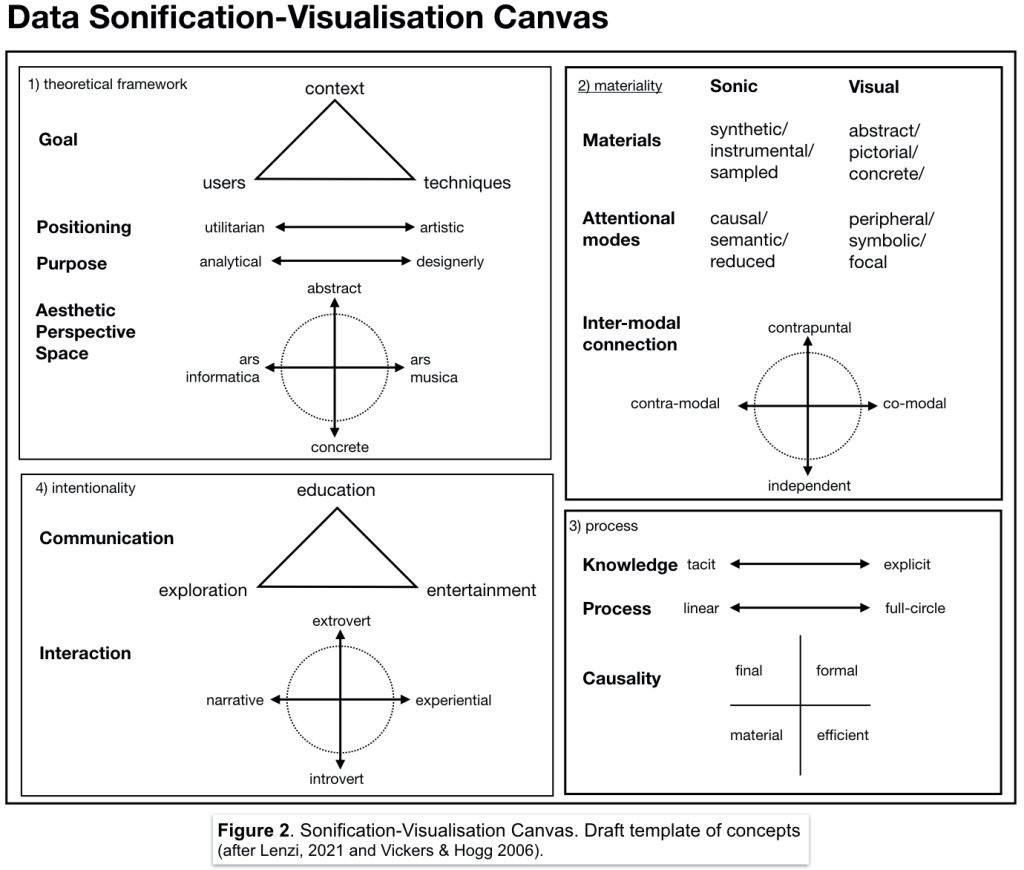

Sonification is the translation of data into sound. Inherently interdisciplinary, the field has developed tremendously, a development characterised by 1) an expanded definition that embraces aesthetics via electroacoustic music composition; 2) the professionalisation of terminology and techniques, together with community-building; and 3) increased attention to visualisation. The time is ripe to focus efforts on the third point. We draw on knowledge from dynamic data visualisation to improve sonification techniques, to develop a theoretical framework informed by research on cross-modal perception, and to determine practicable strategies for concurrent sonification-visualisation design. The project targets are 1) a set of design guidelines, and 2) a proof-of-concept software system applied to geodata of real-life importance, such as rain and wind, pollution and traffic, and forest fires and landslides. People seek to understand their physical environment, and accurate, engaging information design helps them both in everyday activities and in making life choices. Laying the research groundwork for a concurrent sonification-visualisation system that communicates environmental geodata has potential for real-life applications with broad public appeal and societal impact. Ultimately, the project aims to contribute to the digital fabric of society and to improve people’s quality of life.
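To make the basic idea of sonification concrete, here is a minimal sketch of parameter-mapping sonification, one common technique in which each data value controls an acoustic parameter (here, pitch). It is not the project's proof-of-concept system: the 220 to 880 Hz range, the logarithmic pitch mapping, the note length, and the function names are illustrative assumptions, and the visual half of a concurrent sonification-visualisation design is not shown.

```python
import math
import struct
import wave

def map_linear(value, lo, hi, out_lo, out_hi):
    """Linearly rescale value from [lo, hi] to [out_lo, out_hi], clamping to the input range."""
    value = min(max(value, lo), hi)
    return out_lo + (value - lo) * (out_hi - out_lo) / (hi - lo)

def value_to_frequency(value, lo, hi, f_lo=220.0, f_hi=880.0):
    """Map a data value to a pitch, interpolating on a logarithmic (musical) frequency scale."""
    t = map_linear(value, lo, hi, 0.0, 1.0)
    return f_lo * (f_hi / f_lo) ** t

def sonify(series, sample_rate=22050, note_seconds=0.25):
    """Render one sine tone per data point; return 16-bit PCM sample values as ints."""
    lo, hi = min(series), max(series)
    n = int(sample_rate * note_seconds)
    samples = []
    for value in series:
        # A flat series has no range to map, so fall back to a fixed reference pitch.
        freq = value_to_frequency(value, lo, hi) if hi > lo else 440.0
        for i in range(n):
            # 0.3 of full scale leaves headroom and avoids clipping.
            samples.append(int(0.3 * 32767 * math.sin(2 * math.pi * freq * i / sample_rate)))
    return samples

def write_wav(path, samples, sample_rate=22050):
    """Write mono 16-bit PCM samples to a WAV file with the standard-library wave module."""
    with wave.open(path, "wb") as w:
        w.setnchannels(1)
        w.setsampwidth(2)
        w.setframerate(sample_rate)
        w.writeframes(struct.pack("<%dh" % len(samples), *samples))
```

For example, a hypothetical series of hourly rainfall readings could be rendered with `write_wav("rain.wav", sonify([0.0, 0.4, 2.1, 7.5, 3.2, 0.0]))`. Mapping in log-frequency is a design choice: listeners hear pitch roughly logarithmically, so equal data steps then sound like equal musical intervals.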

Funding

Strategic Research Grant (SRG-Fd), City University of Hong Kong (2023/09–2025/02)

Links

- PerMagnus Lindborg, https://www.scm.cityu.edu.hk/people/lindborg-permagnus

- Sara Lenzi, https://www.saralenzi.com/

- Paolo Ciuccarelli, https://camd.northeastern.edu/faculty/paolo-ciuccarelli/

- Data Sonification Archive, http://sonification.design

- Repository on GitHub, https://github.com/SonoSofisms/ears-eyes

Publications by the team

- Lindborg PM, Caiola V, Chen M, Ciuccarelli P, Lenzi S (2023/09, in review). “A Meta-Analysis of Project Classifications in the Data Sonification Archive”. J Audio Engineering Society.

- Lenzi S, Lindborg PM, Han NZ, Spagnol S, Kamphuis D, Özcan E (2023/09). “Disturbed Sleep: Estimating Night-time Sound Annoyance at a Hospital Ward”. Proc European Acoustics Association.

- Lindborg PM, Lenzi S & Chen M (2023/01). “Climate Data Sonification and Visualisation: An Analysis of Aesthetics, Characteristics, and Topics in 32 Recent Projects”. Frontiers Psych 13.

- Lenzi S, Sádaba J & Lindborg PM (2021). “Soundscape in Times of Change: Case Study of a City Neighbourhood During the COVID-19 Lockdown.” Frontiers Psych 12:412.

- Lenzi S & Ciuccarelli P (2020). “Intentionality and design in the data sonification of social issues.” Big Data and Society.

GRF #11605622, 2022/23

PI :: PerMagnus Lindborg (City University of Hong Kong)

Co-Is :: Francesco Aletta (University College London, UK), Kongmeng Liew (Nara Institute of Science and Technology, Japan), Yudai Matsuda (CityU, HK), Jieling Xiao (Birmingham City University, UK).

Project website :: http://soundlab.scm.cityu.edu.hk/mmhk/

Abstract

Sensory cultural heritage, combining tangible and intangible heritage, creates identity and cohesion in a community. In urban research, analyses of everyday and informal customs typically rely on visual images, texts, and archival materials to describe the multifarious aspects of culturally significant places and practices. By contrast, the acoustic environment is often not part of the narrative, and the olfactory environment is very rarely recorded. Given the rapid and profound transformation Hong Kong is currently undergoing, essential threads of the city’s fabric risk being neglected and might even disappear before they can be documented. Can we really claim to know urban places without thoroughly considering, and documenting, the sensory cultural heritage represented by their sounds and smells?

Our project seeks to preserve the threatened sensory environment of some of Hong Kong’s signature sites and to create a richer and more accurate understanding of culturally important places, rituals, and social practices, allowing greater appreciation of this heritage. At the same time, the project aims to shed light on the crossmodal relationships between urban landscape, soundscape, and smellscape.

We propose a multimodal research approach that takes sound and smell as core components of the immersive urban experience. The project will document a large sample of characteristic sites in Hong Kong, focusing on places for street food (街頭小食), Chinese temples (寺廟 [佛祖 Buddha, 天后 Tin Hau…]), and wet markets (傳統市場). The database will be open-access via a dedicated project website, connecting with recently initiated international soundscape–smellscape projects. It will meet the current need for detailed documentation of local cultural heritage; support interdisciplinary collaborations; serve as a significant resource for future longitudinal studies of urbanism in Hong Kong; and provide a reference point for cross-cultural studies with other cities.

Methodologically, we will develop the capacity to systematically collect and analyse data from complex physical environments, integrating sonic and olfactory measurements with video capture and narratives. Field data will be both objective and subjective, including 360° video, 3D audio (Ambisonics), and ‘smellprints’ (gas chromatography-mass spectrometry analysis of air samples), as well as sensory walks in which observers make structured annotations of the perceived visual, auditory, and olfactory environment, and interviews with stakeholders. The database generated in the project will serve further research in environmental psychology, multimodal perception, and sensory integration. It will also prepare the ground for future multisensory applications in virtual tourism, art, games, film, and spatial design at museums, galleries, and commercial venues.


The Atlas of Maritime Buddhism is an archive of historic evidence for the spread of Buddhism from India to Korea through the seaports of Southeast Asia from the 2nd century BC to the 12th century AD. It is one of 54 cultural atlases developed and maintained under the Electronic Cultural Atlas Initiative (ECAI), administered by the University of California, Berkeley (UCB). With contributions from researchers around the world, it includes geospatial coordinates, gazetteers for hundreds of sites, images of archaeological sites and artifacts, religious and geopolitical empires and zones of influence, inscriptions and transcriptions of Sanskrit texts, historic maps, accounts by Buddhist monks and ambassadors, records of trade, hydrographic data, monsoon records, and shipwreck datasets.

Given its tremendous heterogeneity, the Atlas requires a new form of visual, cartographic, and time-space narrative strategy that currently lies outside traditional forms of interpretation. This is what The Digital Atlas of Maritime Buddhism will provide. The aim of the proposed research is to develop a pioneering narrative-driven deep mapping schema for interactively exploring the narrative patterns, historical processes, and cultural phenomena in the Atlas. Through the experimental development and application of the world’s first deep mapping data browser, a navigational interface to be developed in a 360-degree 3D (omnidirectional) virtual environment, this schema will afford pathways for multiple users to undertake different journeys through this rich and fascinating material.

The research is being conducted by Jeffrey Shaw in collaboration with UNSW (Australia), the University of California, Berkeley, Fudan University, and École polytechnique fédérale de Lausanne.

EXHIBITION RECORD

2021/05/16 - 2026/05/30 : Fo Guang Shan Buddha Museum Gallery, Kaohsiung, Taiwan

2021/07/07 - 2021/10/03 : Indra and Harry Banga Gallery, City University of Hong Kong, Hong Kong, China

PROJECTS

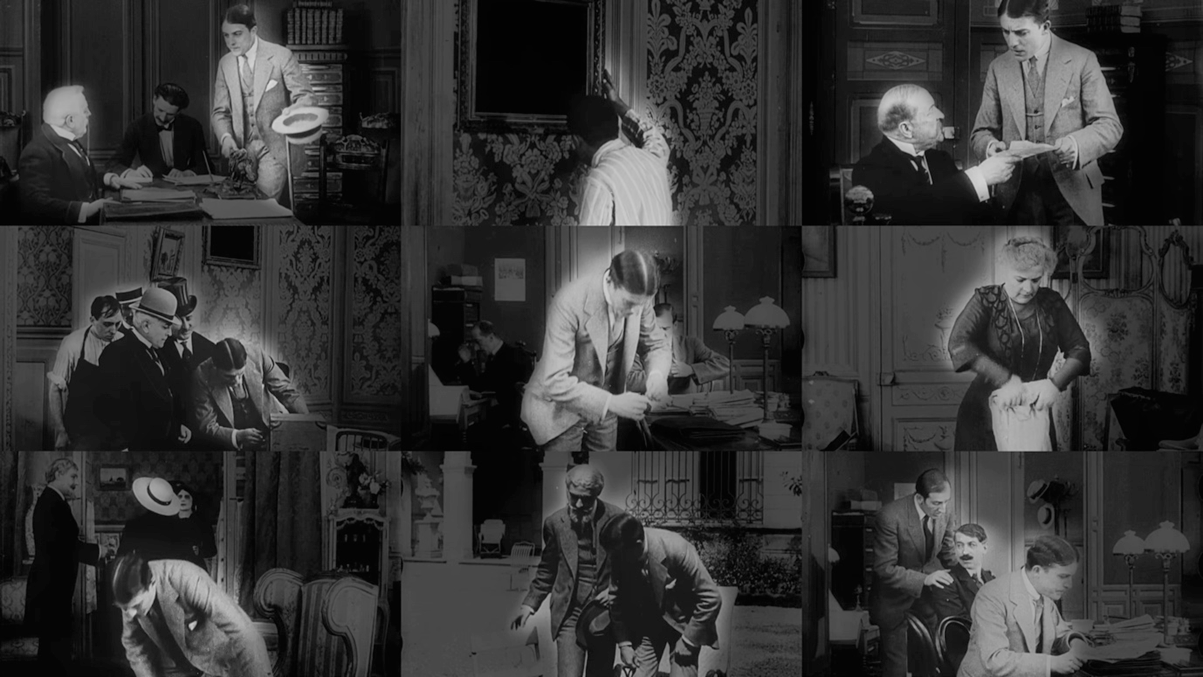

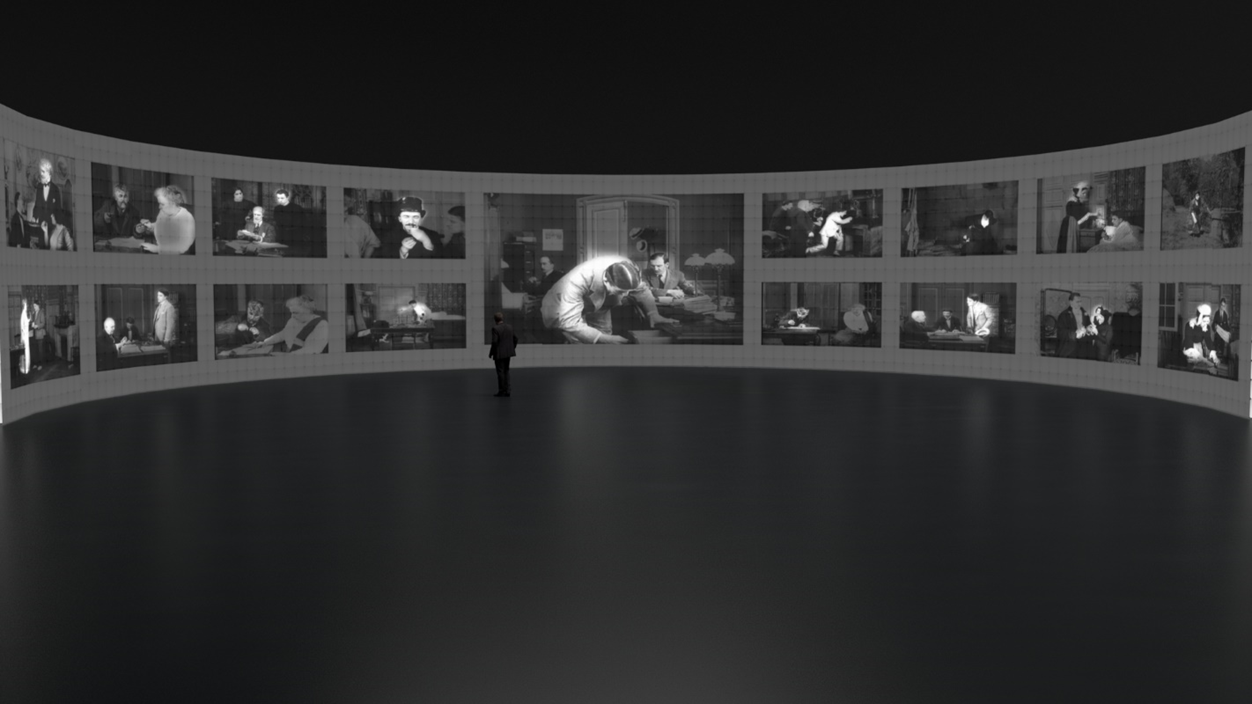

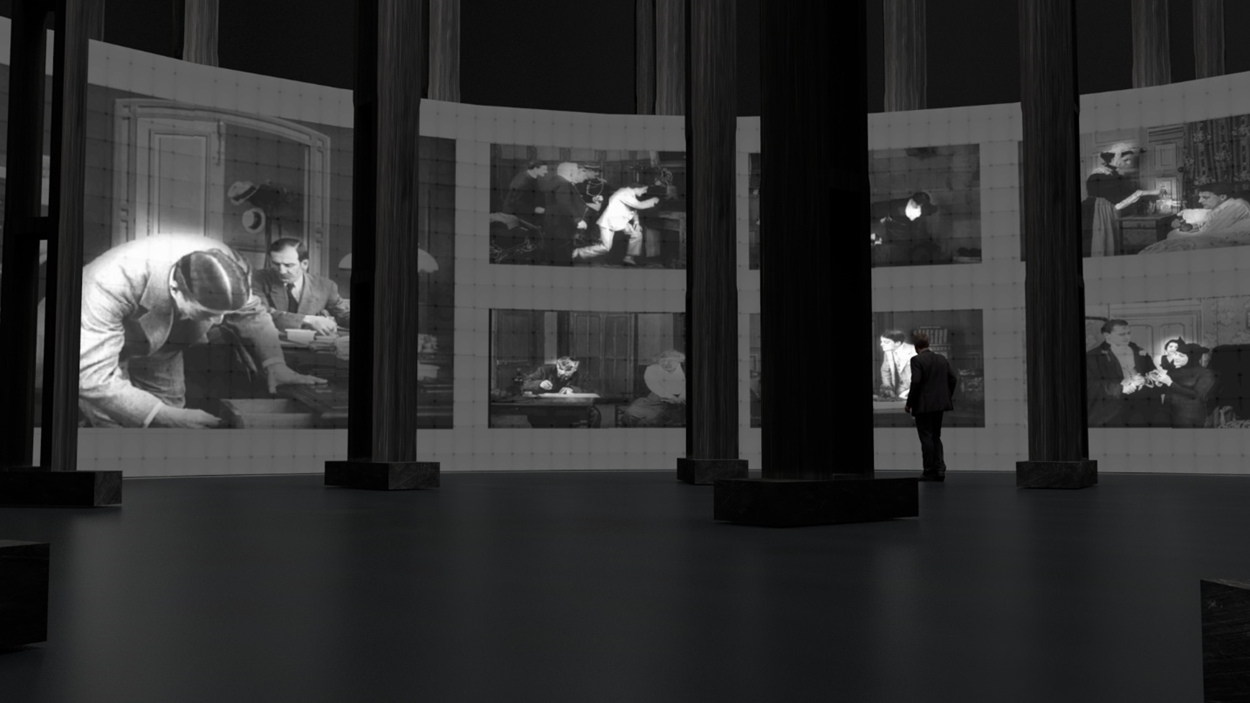

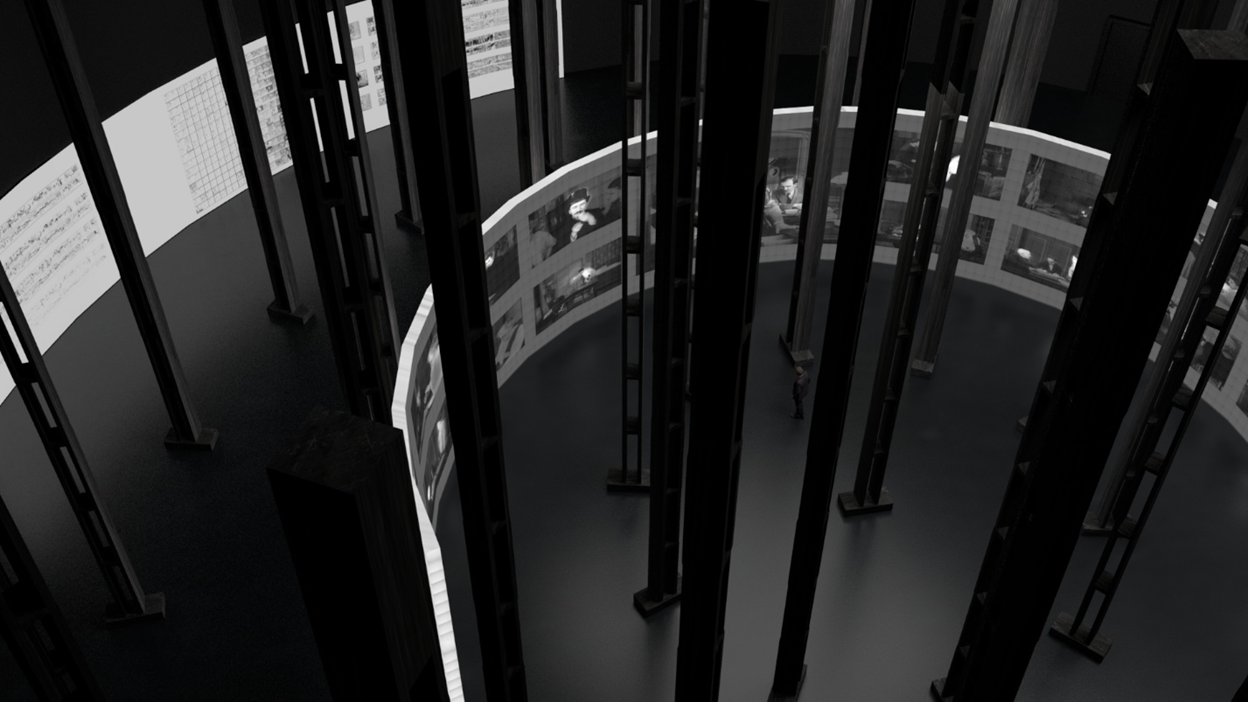

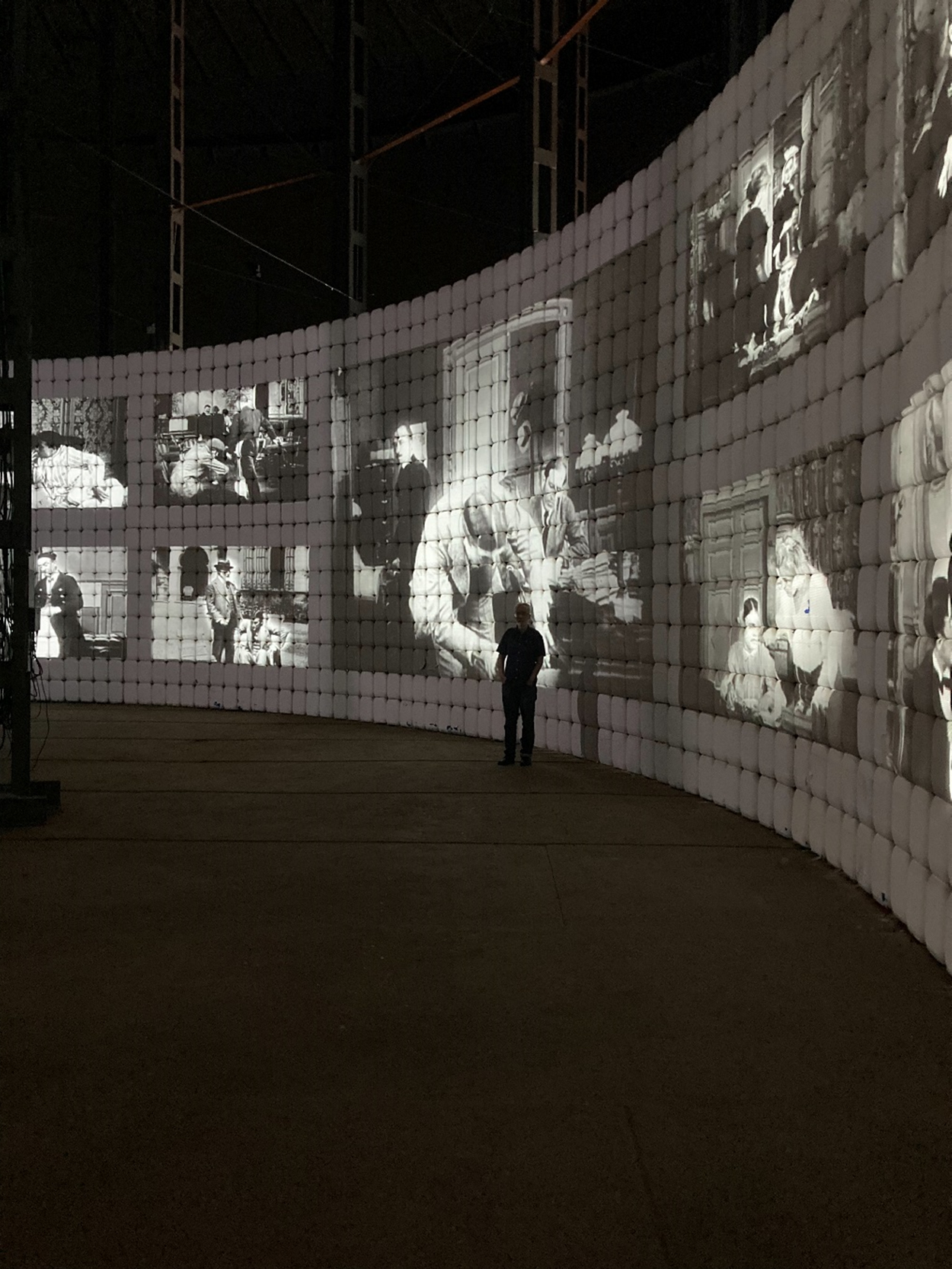

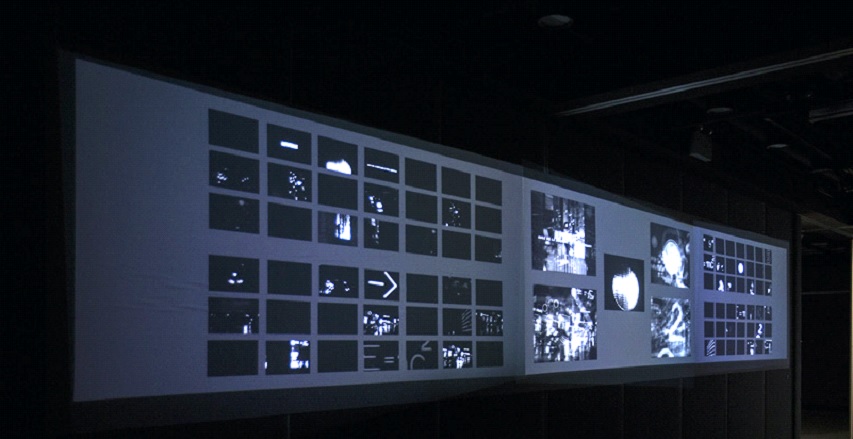

Hidden Networks is a large-scale eight-channel video installation that applies machine learning techniques to the analysis of the moving image. A system of deep neural networks analyzes the optical flow in a dataset of silent films directed by Louis Feuillade and identifies scenes with similar motions: scenes in which the figures move in the same direction, with the same speed, or with the same rhythm. The work was selected by a jury convened by the government of the Canary Islands for presentation at the El Tanque Cultural Centre in Tenerife.
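The deep networks used in Hidden Networks are not described here, but the underlying idea of grouping scenes by their motion can be illustrated with a much simpler, hand-crafted descriptor. The sketch below assumes an optical-flow field has already been computed for each scene as a list of (dx, dy) vectors; it builds a magnitude-weighted histogram of flow directions and retrieves the scene whose histogram is most similar by cosine similarity. It only captures "moving in the same direction"; speed, rhythm, and the function names are assumptions outside this sketch.

```python
import math

def flow_histogram(flow, bins=8):
    """Magnitude-weighted histogram of optical-flow directions, normalised to sum to 1."""
    hist = [0.0] * bins
    for dx, dy in flow:
        mag = math.hypot(dx, dy)
        if mag == 0:
            continue  # static pixels carry no direction
        angle = math.atan2(dy, dx) % (2 * math.pi)  # direction in [0, 2*pi)
        hist[int(angle / (2 * math.pi) * bins) % bins] += mag
    total = sum(hist)
    return [h / total for h in hist] if total > 0 else hist

def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def most_similar(query_flow, scenes):
    """Return the name of the scene whose direction histogram best matches the query's."""
    q = flow_histogram(query_flow)
    return max(scenes, key=lambda name: cosine(q, flow_histogram(scenes[name])))
```

A learned descriptor would replace `flow_histogram`, but the retrieval step (compare descriptors, keep the nearest) stays the same.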

A video documentation of the installation can be found at https://vimeo.com/581860190

The following website introduces the concept of the work:

http://concept-script.com/hidden_networks/index.html#

Image gallery

The gallery includes the 3D model used in the production of the work and images of the actual installation at the El Tanque Cultural Centre.

Recent Exhibition

Héctor Rodríguez, Hidden Networks (solo exhibition), El Tanque Cultural Center (Tenerife, Spain), 3 July, 2021.

For more information about the exhibition, please visit

http://www.gobiernodecanarias.org/cultura/eltanque/eventos/RedesOcultas

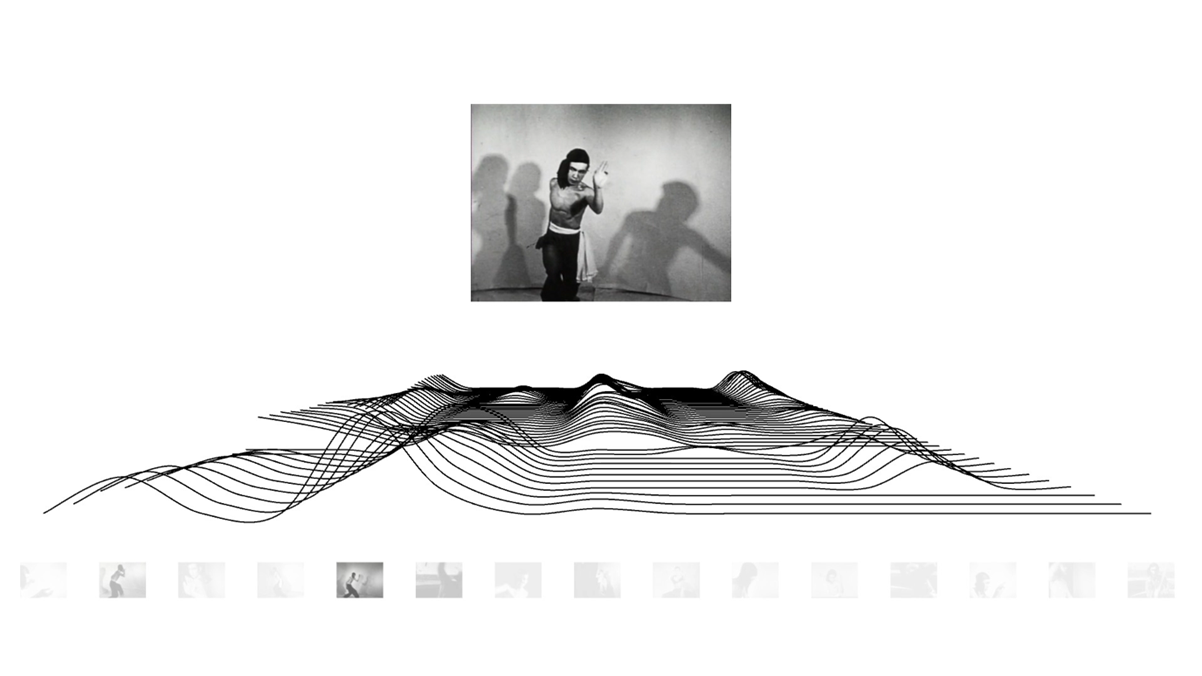

A project about the automatic analysis and visualization of motion in the cinema. A newly designed machine learning algorithm decomposes the movement in every sequence of a movie into a set of elementary motions. These elementary motions are then recombined to produce a reconstruction of the visible movement in the sequence. The analysis and reconstruction are displayed as a two-channel video installation. The visualization of the movement uses a variant of the streakline method often employed in fluid dynamics.

Shown at: Neural Information Processing Systems (NeurIPS), December 9, 2020.

http://www.aiartonline.com/highlights-2020/hector-rodriguez-3/
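The streakline method mentioned above comes from flow visualization: a streakline is the curve formed by all particles that have passed through one fixed point. A minimal sketch follows, assuming a 2D velocity field given as a Python function and simple forward-Euler integration. In a film application the field would presumably be the estimated motion between frames, but that connection, and the function name, are assumptions rather than the project's implementation.

```python
def streakline(velocity, seed, steps, dt=1.0):
    """Positions, after `steps` time steps, of all particles released from `seed`.

    velocity(x, y, t) -> (u, v) may vary in time. One particle is released from the
    fixed seed point at every step, and every particle is advected with forward Euler.
    """
    particles = []
    for k in range(steps):
        t = k * dt
        particles.append(seed)  # release a new particle at the seed point
        moved = []
        for x, y in particles:
            u, v = velocity(x, y, t)
            moved.append((x + u * dt, y + v * dt))
        particles = moved
    return particles  # oldest particle first
```

Unlike a pathline (the trajectory of a single particle), a streakline bends when the field changes over time, which is what makes it useful for showing evolving motion.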

This video uses deep learning and archetypal analysis methods to analyze and visualize the rhythmic flow of Maya Deren's 1948 film Meditation on Violence, made in collaboration with Chinese martial artist Chao-Li Chi (Ji Chaoli).

Shown at: Conference on Computer Vision and Pattern Recognition (CVPR) 2021: Computer Vision Art Gallery, 19 June 2021

https://computervisionart.com/pieces2021/deep-archetypes/


PROJECTS

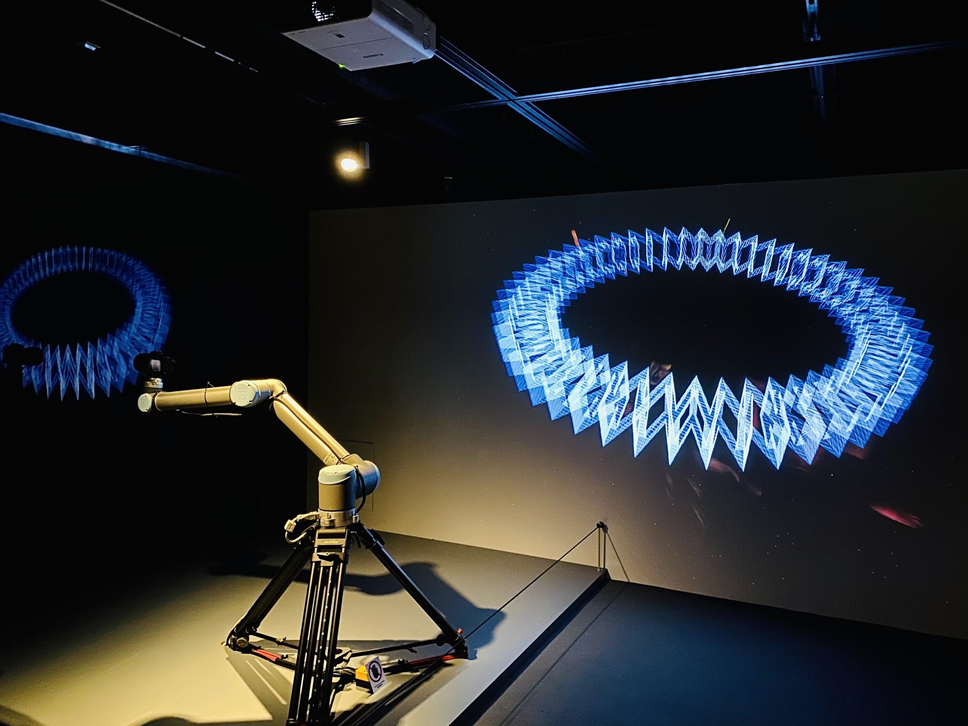

Impression Machine is a media art project based on robotics and kinetic photography. In this project, we developed a new system and used a highly experimental approach to create a new visual experience for the audience. The work is embodied as a "performative" installation comprising a 6-axis robot arm, a digital camera, a computer, and two screens (a 60" TV and a 3 m wide projection). While the installation performs, the audience is invited to observe a sequence of events and then comprehend the reasons and meanings behind the abstract visuals presented.

The camera is mounted on the robot arm and moves through the space while taking long-exposure photographs. A set of contour lines is displayed on the TV and captured by the camera. Because the camera motion and the displayed contour lines are tightly synchronized, the light contours appear stacked in alignment in the long exposures, forming a number of 3D geometric shapes conceived by Leonardo da Vinci. The work asks us to rethink how "perspective" is created in a 2D representation (such as a painting or a photograph) and responds directly to Leonardo's study of perspective. Each resulting long-exposure photo is displayed on the projection wall. All light contours are rendered, and all long exposures captured, in real time on site. The work contributes a technological innovation for new media art embodiment and offers the audience a new artistic experience.
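The synchronization principle can be sketched for the simplest solid, a sphere, under strong simplifying assumptions: the camera moves in equal steps along the depth axis, perspective scaling is ignored, and at each stop the TV shows the sphere's cross-section at that depth, so the long exposure accumulates the circles into a solid. The sphere and the function names are illustrative; the installation's actual trajectories, Leonardo geometries, and perspective corrections are not described in the text.

```python
import math

def sphere_slices(radius, steps):
    """Pair each camera depth z with the circle radius to display for a sphere.

    The camera travels from -radius to +radius in `steps` equal increments; at each
    stop the screen shows the sphere's cross-section at that depth.
    """
    if steps < 2:
        raise ValueError("need at least two steps")
    schedule = []
    for k in range(steps):
        z = -radius + 2 * radius * k / (steps - 1)
        r = math.sqrt(max(radius * radius - z * z, 0.0))  # guard against tiny negatives
        schedule.append((z, r))
    return schedule

def circle_points(r, n=64):
    """Vertices of the contour polyline drawn on the screen for one slice."""
    return [(r * math.cos(2 * math.pi * i / n), r * math.sin(2 * math.pi * i / n))
            for i in range(n)]
```

Drawing `circle_points(r)` at each `(z, r)` stop while the shutter stays open is the long-exposure analogue of slicing a solid into contour layers.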

Impression Machine was shown in the exhibition “Leonardo da Vinci: Art & Science. Then & Now” from September to December 2019 at CityU’s Indra and Harry Banga Gallery. Twelve contemporary artworks, including Impression Machine, were displayed alongside twelve original drawings by Leonardo da Vinci to mark the 500th anniversary of his death. The exhibition attracted over 11,000 visitors.

The project was partially supported by an ACIM Research Fellowship and an HKADC Project Grant.

Website: https://www.cityu.edu.hk/bg/exhibitions/leonardo-da-vinci

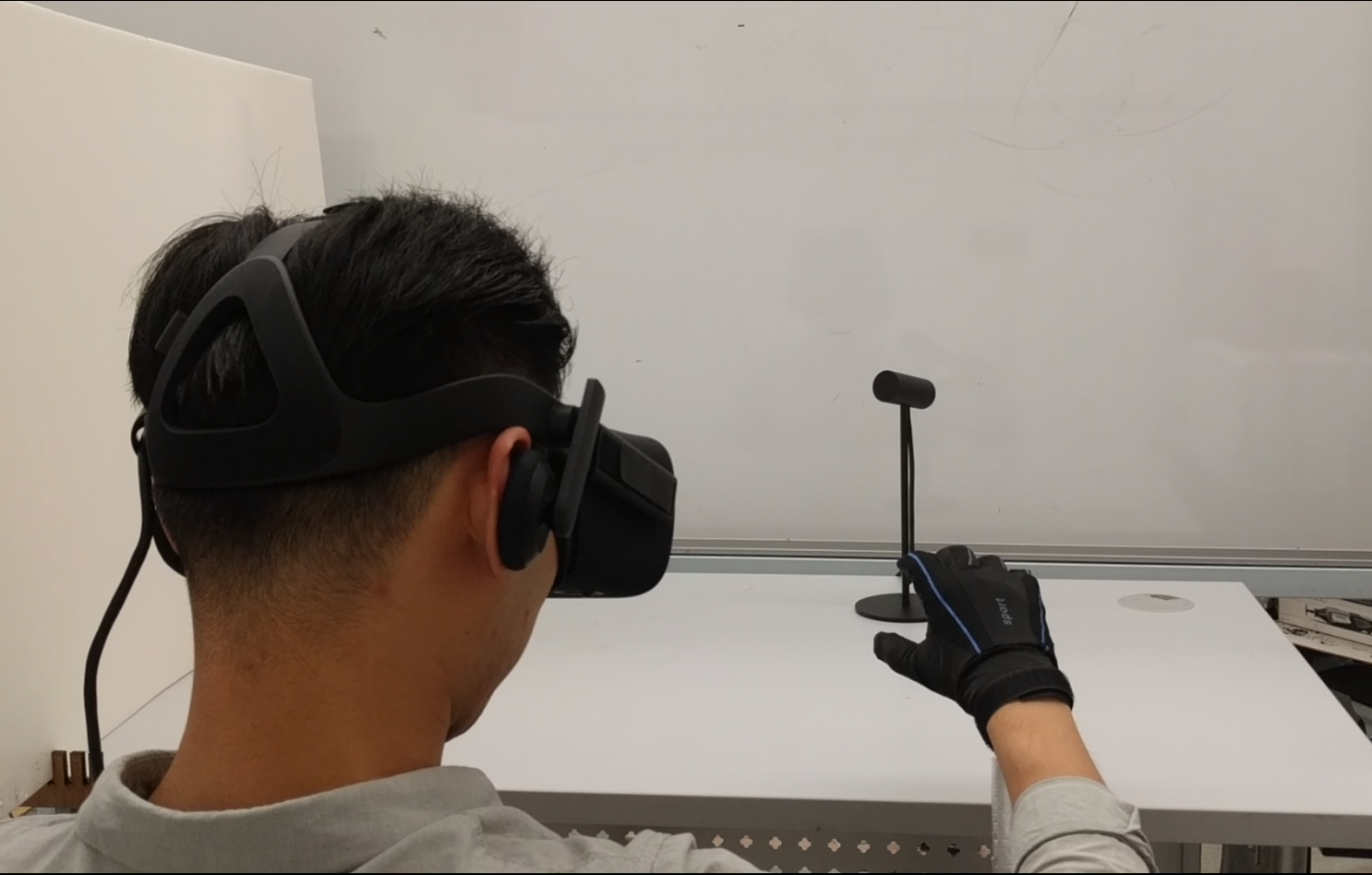

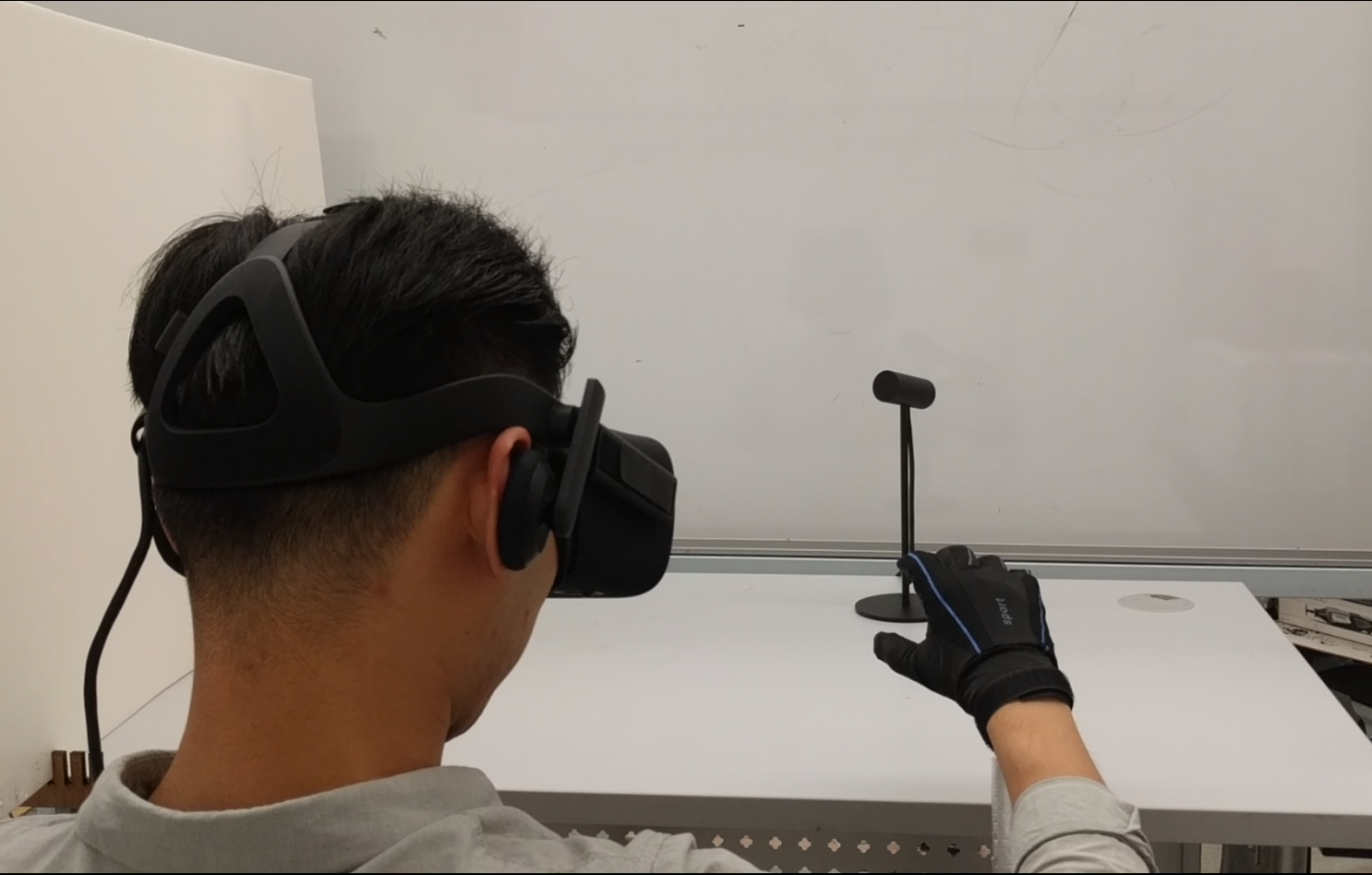

ThermAirGlove (TAGlove; Kening Zhu) is a pneumatic glove that provides thermal feedback to support the haptic experience of grabbing objects of different temperatures and materials in VR. The system consists of a glove with five inflatable airbags on the fingers and palm, two temperature chambers (one hot and one cold), and a closed-loop pneumatic thermal control system. User studies showed that using TAGlove in immersive VR could significantly improve users’ sense of presence compared with conditions without any temperature or material simulation.
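ThermAirGlove's control loop is described only as closed-loop and pneumatic. As an illustration, the sketch below simulates one airbag whose temperature follows a first-order lag toward the temperature of air mixed from the hot and cold chambers, with an integral controller adjusting the hot-air fraction. The plant model, chamber temperatures, gains, and function name are all hypothetical.

```python
def simulate_thermal_loop(target, t_hot=60.0, t_cold=10.0, t_start=30.0,
                          ki=0.01, rate=0.5, dt=0.1, seconds=60.0):
    """Simulate a hypothetical hot/cold air-mixing loop for one airbag.

    Plant (assumed): airbag temperature relaxes toward the supply-air temperature
    at `rate` per second. Controller: integral action on the temperature error
    adjusts the hot-air fraction h, clamped to [0, 1], which also limits windup.
    Returns the final (temperature, hot-air fraction).
    """
    temp, h = t_start, 0.5
    for _ in range(round(seconds / dt)):
        error = target - temp
        h = min(max(h + ki * error * dt, 0.0), 1.0)   # integral control, clamped
        supply = h * t_hot + (1.0 - h) * t_cold       # mix from the two chambers
        temp += rate * (supply - temp) * dt           # first-order thermal lag
    return temp, h
```

Integral action drives the steady-state error to zero whenever the target lies between the two chamber temperatures; an unreachable target simply saturates the valve mix.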

Eyes-free Smartwatches (Kening Zhu) expands the interaction space of touch-screen devices (e.g., smartphones and smartwatches) via bezel gestures. While existing work has focused on bezel-initiated swipe (BIS) on square screens, we investigate the usability of BIS on round smartwatches via six different circular bezel layouts. We evaluated user performance of BIS on these layouts in an eyes-free situation and found that BIS performance is highly orientation-dependent and varies significantly among users. We then compared the performance of personal and general ML models and found that personal models significantly improve accuracy for a range of layouts. Lastly, we discuss potential applications enabled by BIS.
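To illustrate how a bezel-initiated swipe might be assigned to a region of a round screen, the sketch below maps the swipe's starting point to one of N equal angular segments. The segment count and the rotation offset are stand-ins for the six layouts studied, which are not detailed here, so both are assumptions; `offset_deg` makes the layout's orientation explicit, the dimension along which performance was reported to vary.

```python
import math

def bezel_segment(x, y, segments, offset_deg=0.0):
    """Index of the bezel segment hit by a swipe starting at (x, y).

    Coordinates are relative to the centre of a round watch face (+x right, +y up).
    Segment 0 is centred on `offset_deg` (0 = 3 o'clock); indices increase
    counter-clockwise.
    """
    angle = math.degrees(math.atan2(y, x))
    width = 360.0 / segments
    # Shift by half a segment so each segment is centred on its nominal direction.
    return int(((angle - offset_deg + width / 2) % 360.0) // width)
```

With `segments=4`, a swipe starting at 12 o'clock falls in segment 1; rotating the layout by 90 degrees moves it to segment 0.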

TipText (Kening Zhu) investigates new text entry techniques using micro thumb-tip gestures, specifically using a miniature QWERTY keyboard residing invisibly on the first segment of the index finger. Text entry can be carried out using the thumb-tip to tap the tip of the index finger. The keyboard layout is optimized for eyes-free input by utilizing a spatial model reflecting the users' natural spatial awareness of key locations on the index finger. Our user evaluation showed that participants achieved an average text entry speed of 11.9 WPM and were able to type as fast as 13.3 WPM towards the end of the experiment. Winner: Best Paper Award, ACM UIST 2019
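TipText decodes thumb taps using a spatial model of where users expect keys to be. A common way to combine such a model with a vocabulary is a statistical decoder: score every word of the right length by the Gaussian likelihood of each tap around its letter's key centre plus the word's prior, and keep the best. The toy key layout, sigma, lexicon, and function names below are hypothetical; this shows the general technique, not TipText's published layout or decoder.

```python
import math

def log_gaussian(point, centre, sigma):
    """Log-density of an isotropic 2D Gaussian centred on a key."""
    dx, dy = point[0] - centre[0], point[1] - centre[1]
    return -(dx * dx + dy * dy) / (2 * sigma * sigma) - math.log(2 * math.pi * sigma * sigma)

def decode(taps, lexicon, key_centres, sigma=0.15):
    """Return the lexicon word maximising log P(word) + sum of log P(tap | letter)."""
    best, best_score = None, -math.inf
    for word, count in lexicon.items():
        if len(word) != len(taps) or any(ch not in key_centres for ch in word):
            continue  # need exactly one tap per letter, on known keys
        score = math.log(count)  # unnormalised prior; a constant offset keeps the argmax
        score += sum(log_gaussian(t, key_centres[ch], sigma) for t, ch in zip(taps, word))
        if score > best_score:
            best, best_score = word, score
    return best
```

For example, with keys `{"a": (0, 0), "b": (1, 0), "c": (0, 1), "d": (1, 1)}` and lexicon `{"ab": 10, "cd": 10, "ad": 1}`, a second tap exactly halfway between "b" and "d" is resolved by the prior in favour of "ab".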

PI: Kening Zhu

Chen, Taizhou, Lantian Xu, Xianshan Xu, and Kening Zhu (*). "GestOnHMD: Enabling Gesture-based Interaction on the Surface of Low-cost VR Head-Mounted Display". IEEE Transactions on Visualization and Computer Graphics, vol. 27, no. 5, pp. 2597-2607, May 2021, doi: 10.1109/TVCG.2021.3067689.

Low-cost virtual-reality (VR) head-mounted displays (HMDs) that integrate smartphones have brought immersive VR to the masses and increased the ubiquity of VR. However, these systems are often limited by poor interactivity. In this paper, we present GestOnHMD, a gesture-based interaction technique and gesture-classification pipeline that leverages the stereo microphones in a commodity smartphone to detect tapping and scratching gestures on the front, left, and right surfaces of a mobile VR headset. Taking the Google Cardboard as our target headset, we first conducted a gesture-elicitation study to generate 150 user-defined gestures, 50 on each surface. We then selected 15, 9, and 9 gestures for the front, left, and right surfaces respectively, based on user preferences and signal detectability. We constructed a data set containing the acoustic signals of 18 users performing these on-surface gestures and trained deep-learning classification models for gesture detection and recognition. The three-step pipeline of GestOnHMD achieved an overall accuracy of 98.2% for gesture detection, 98.2% for surface recognition, and 97.7% for gesture recognition. Lastly, using a real-time demonstration of GestOnHMD, we conducted a series of online participatory-design sessions to collect a set of user-defined gesture-referent mappings that could potentially benefit from GestOnHMD.

For more information about the project, please visit https://meilab-hk.github.io/projectpages/gestonhmd.html
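The three-step structure of the GestOnHMD pipeline (detection, then surface recognition, then gesture recognition) can be sketched as a cascade in which each later stage runs only if the earlier one succeeds, and the final classifier is chosen per surface. The trained deep-learning models are not reproduced: the RMS-energy detector, the left/right level-difference surface heuristic, the thresholds, and all names are hypothetical stand-ins.

```python
import math
from typing import Callable, Dict, List, Optional, Tuple

Stereo = Tuple[List[float], List[float]]  # (left-microphone samples, right-microphone samples)

def rms(samples: List[float]) -> float:
    """Root-mean-square level of one channel."""
    return math.sqrt(sum(s * s for s in samples) / len(samples)) if samples else 0.0

def detect(window: Stereo, threshold: float = 0.05) -> bool:
    """Step 1 stand-in: treat a window as a gesture if either channel is loud enough."""
    return max(rms(window[0]), rms(window[1])) > threshold

def surface_by_level(window: Stereo, ratio: float = 1.5) -> str:
    """Step 2 stand-in: clearly louder left -> "left", clearly louder right -> "right", else "front"."""
    left, right = rms(window[0]), rms(window[1])
    if left > ratio * right:
        return "left"
    if right > ratio * left:
        return "right"
    return "front"

def run_pipeline(window: Stereo,
                 surface_model: Callable[[Stereo], str],
                 gesture_models: Dict[str, Callable[[Stereo], str]]) -> Optional[Tuple[str, str]]:
    """Cascade: detect, recognise the surface, then apply that surface's gesture classifier."""
    if not detect(window):
        return None  # nothing detected, so the later (heavier) stages are skipped
    surface = surface_model(window)
    return surface, gesture_models[surface](window)
```

Keeping one gesture classifier per surface mirrors the per-surface gesture sets (15, 9, and 9) described above, since each classifier only has to separate the gestures allowed on its own surface.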

PI: Miu Ling Lam

AIFNet is a deep neural network for removing spatially varying defocus blur from a single defocused image. We leverage light field synthetic aperture and refocusing techniques to generate a large set of realistic defocused and all-in-focus image pairs depicting a variety of natural scenes for network training. AIFNet consists of three modules: defocus map estimation, deblurring, and domain adaptation. The effects and performance of various network components are extensively evaluated. We also compare our method with existing solutions using several publicly available datasets. Quantitative and qualitative evaluations demonstrate that AIFNet achieves state-of-the-art performance.

For more information about the project, please visit:

https://sweb.cityu.edu.hk/miullam/AIFNET/
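AIFNet's training pairs were generated with light-field synthetic aperture and refocusing. The core of refocusing is shift-and-add: each sub-aperture view is shifted in proportion to its offset from the centre view and the views are averaged, so scene points at the chosen disparity align and stay sharp while others blur. The sketch below shows this on a toy 1D light field; real light fields are 4D, and the function name, integer-pixel shifts, and border clamping are simplifying assumptions rather than the authors' pipeline.

```python
def refocus_1d(views, disparity):
    """Shift-and-add synthetic-aperture refocusing of a 1D light field.

    views[u] is the 1D image seen from sub-aperture u; its offset from the centre
    view is u - centre. Each view is sampled at x + offset * disparity and the
    samples are averaged, so points at that disparity line up and stay sharp.
    """
    n_views, width = len(views), len(views[0])
    centre = (n_views - 1) / 2
    out = []
    for x in range(width):
        acc = 0.0
        for u, view in enumerate(views):
            xs = int(round(x + (u - centre) * disparity))
            xs = min(max(xs, 0), width - 1)  # clamp at image borders
            acc += view[xs]
        out.append(acc / n_views)
    return out
```

Refocusing the same light field at a disparity that does not match the scene spreads a bright point over neighbouring pixels, which is how a sharp scene yields a realistically defocused counterpart.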


PROJECTS


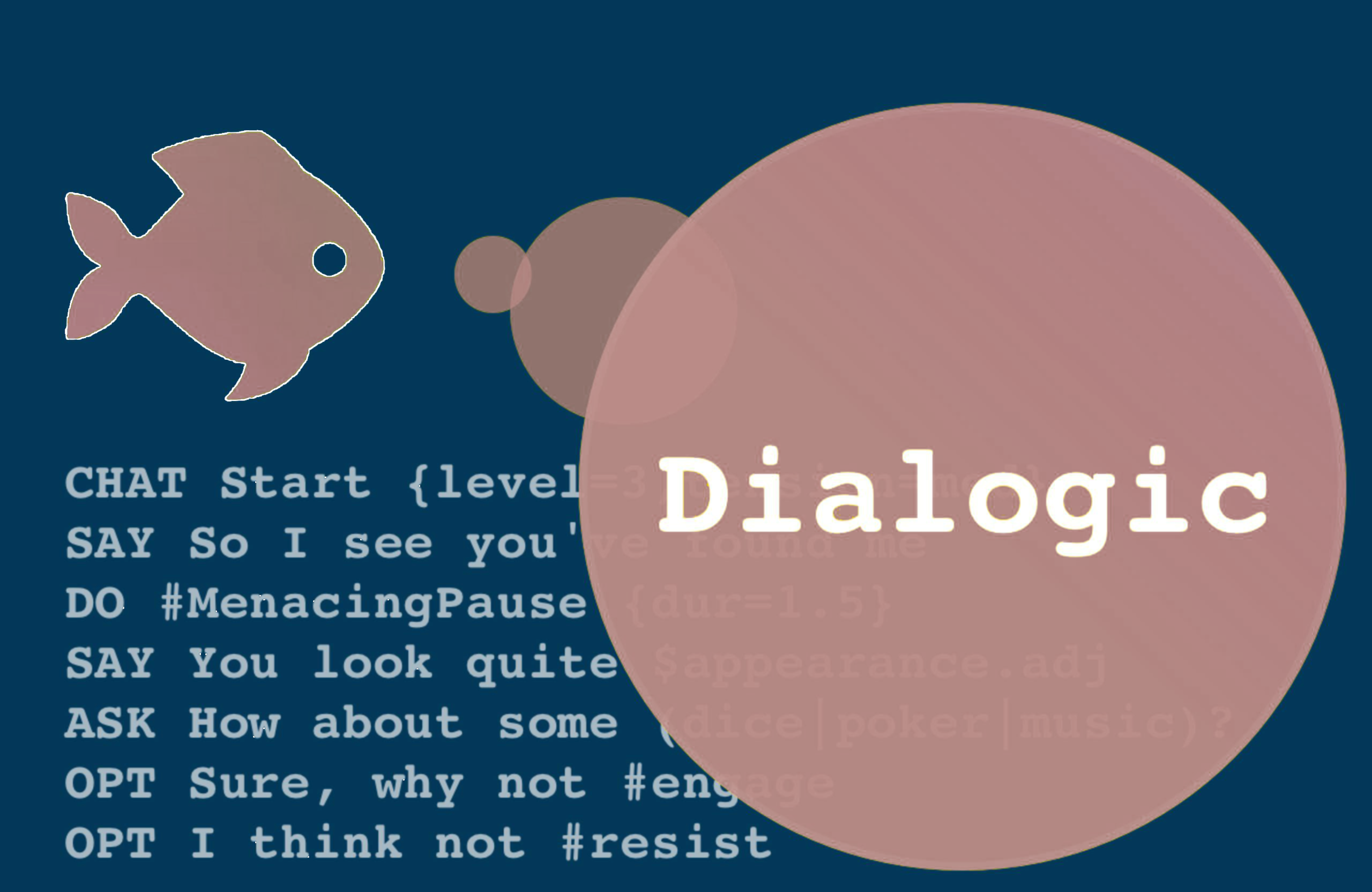

Dialogic (Daniel Howe) is a system designed to help writers easily create interactive scripts with generative elements. It enables writers to craft compelling dialog that responds organically both to user prompts and to events in the environment. The system supports arbitrarily complex interactions between multiple actors, but makes no assumptions about how text is displayed, or about how users will choose their responses. Dialogic was awarded 'Exceptional Paper' and nominated for 'Best Paper' at The International Conference on the Foundations of Digital Games (FDG2020).

Spectre (Daniel Howe) is an AI-driven interactive installation that reveals the secrets of the digital influence industry as users pray to Dataism and the Gods of Silicon Valley. With the help of 'deep-fake' celebrities created via machine learning, including Mark Zuckerberg, Marcel Duchamp, Marina Abramović, and Freddie Mercury, Spectre tells a cautionary tale of technology, democracy, and society, curated by algorithms and powered by visitors' data. Winner of the 2019 Alternate Realities Commission.

Big Dada (Daniel Howe) features AI-generated 'deep-fake' character studies of Marcel Duchamp, Marina Abramović, Mark Zuckerberg, Kim Kardashian, Morgan Freeman, and Freddie Mercury. Big Dada was released on social media in June 2019 and quickly went viral, leading to global press coverage and confused responses from Facebook and Instagram regarding their policies on computational propaganda.


PROJECTS

In June 2018, Chinese scientists were able to 3D print ceramics in microgravity using lunar dust, thereby continuing a 10,000-year tradition of exploration in the use of new materials, novel craftsmanship methods, and technological innovation in the development of Chinese ceramics.

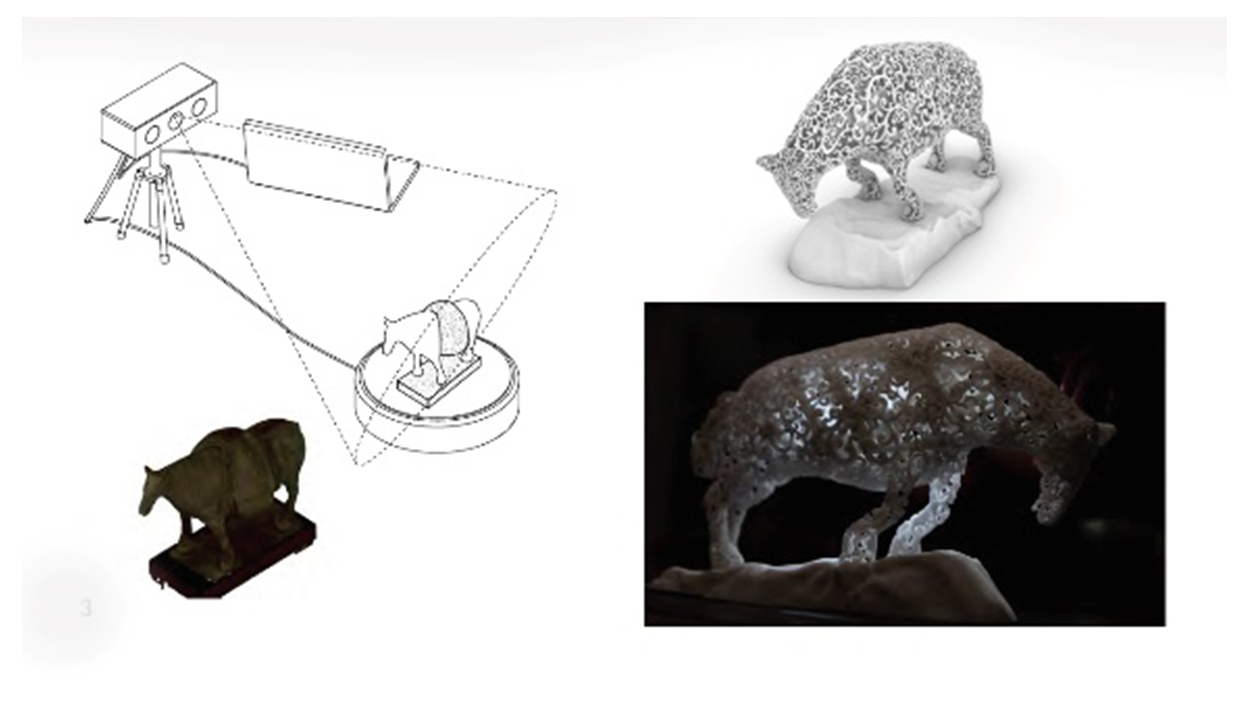

In contrast, in the field of conservation and preservation of cultural artefacts, 3D printing is used not to innovate but to replicate either parts of damaged artefacts or entire pieces, reconstructed from 3D scans of the originals. The resulting surrogates are often criticized for their inauthenticity. Used only as a replicating technology, 3D printing currently seems unable to transfer essential aspects of the craft and material ontology of cultural artefacts, such as tool marks, material haptics, and the patina of objects, which together create the so-called “aura” of cultural objects made by hand.

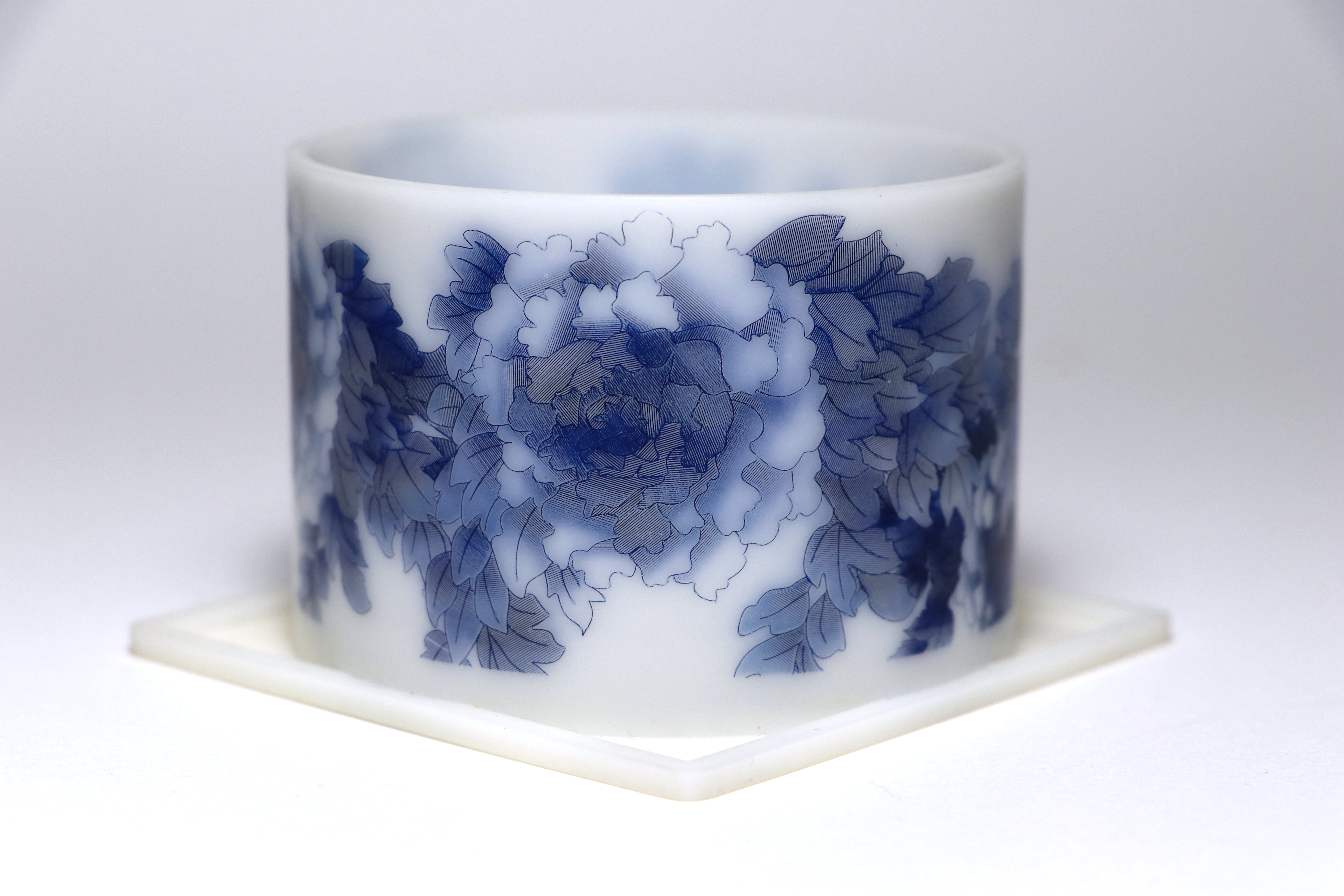

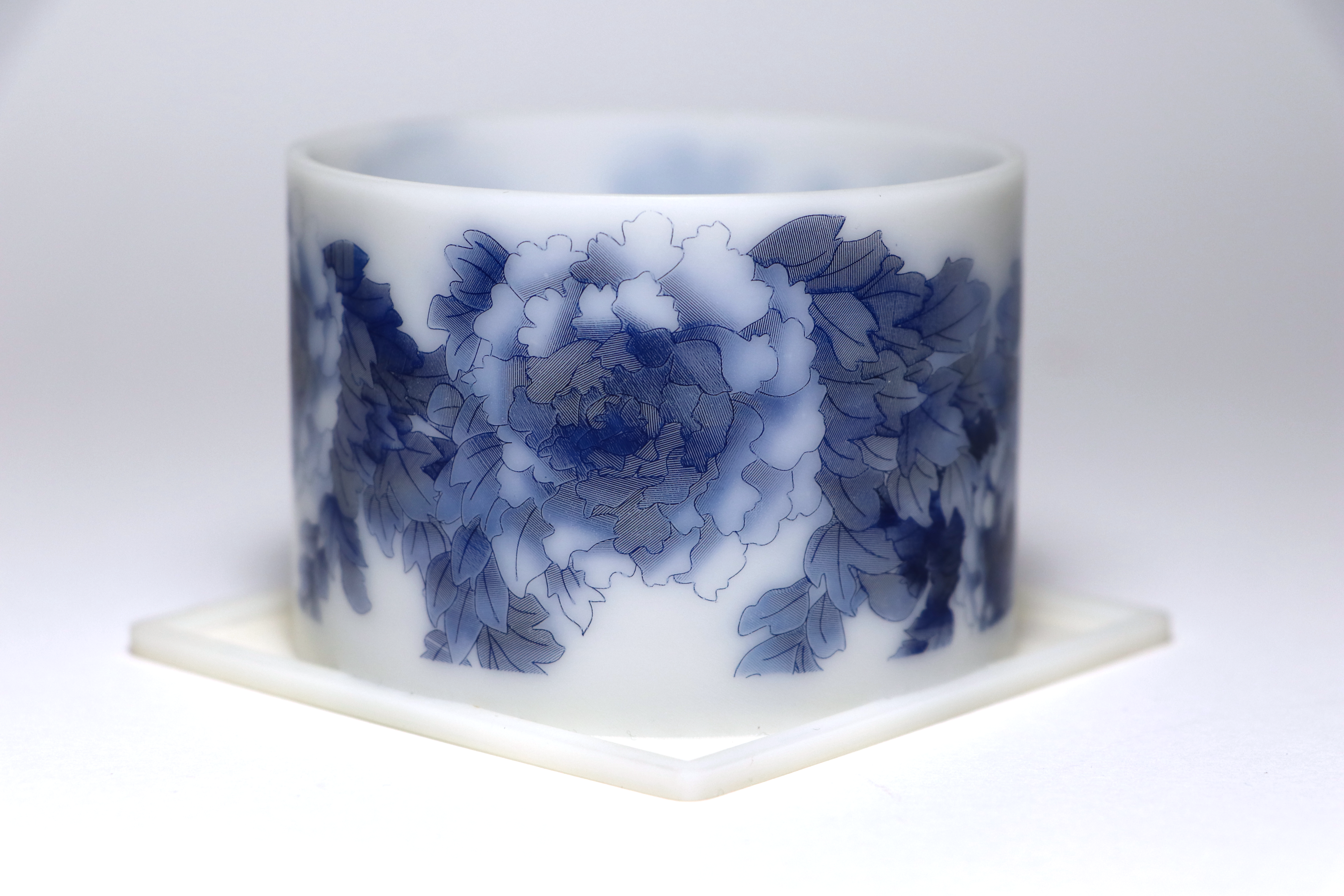

This research project started with ceramic glazes and their translation into new surfaces for 3D printing. Now, supported by a second GRF grant and in collaboration with The Hong Kong University Museum and Art Gallery (UMAG), we continue to investigate transfer methods for Chinese ceramics. We combine scientific, historical, cultural, and technical research to create authenticity in 3D printing that contributes to the development of digital craftsmanship, to cultural heritage, and to museum collection development and management.

This extended research articulates new forms and material expressions for 3D printing based on the analysis and adaptation of traditional ceramic production procedures. It includes modifying the toolpath for 3D printers, developing new methods for transferring 3D-scanned, traditionally crafted ceramic artefacts into 3D objects, and developing specific production methods for printing them. This will allow the traditional craft to be transferred into new ceramics based on 3D scans of selected artefacts in UMAG’s study collection, lead to a hybridization of the traditional qualities of historic artefacts through 3D scanning, and ultimately establish a novel form of museum collection.

Grants

GRF: Science + Technology + Arts (STArts) Digital Craftsmanship in Art and Design - Chinese Ceramics (9043267) $217,889

GRF: New Media Ceramics – Analysis and Methodical Transfer of Craftsmanship Techniques from Chinese Ceramic Painting to the Development of New Glazing for 3D Printed Substrates (9042734) $329,890

3D UV-activated surface of an FDM-printed single-curved geometry, produced via a custom-made 3-axis CNC laser application with a precision of 0.05 mm, articulating brush strokes used in Chinese ceramic glazes.

3D UV-activated surface of an FDM-printed double-curved geometry, produced via a custom-made 3-axis CNC laser application.

Tang Dynasty tomb figures transitioning Chinese ceramics into critical design (inventory number: HKU.C.1953.0038): a student example from the first collaboration with UMAG.

Glass Entanglement VII, exhibited in the solo museum exhibition Metamorphosis or Confrontation, University Museum and Art Gallery, 20th May - 20th November 2020. The work is placed on a carved lacquer table from the museum collection, articulating a digital-craftsmanship genealogy that bridges 300 years and highlights the relation between traditional and contemporary methods in craft.

Exhibitions

Metamorphosis or Confrontation

Curators: Klein, T., Knothe, F. & Kraemer, H., Retrospective Solo Exhibition of the works of Tobias Klein at the University Museum and Art Gallery (UMAG), Hong Kong, 20th May - 6th Dec. 2020

Identities & Fractures

Curators: Klein, T. & Kraemer, H., Solo Exhibition at the Goethe-Institut Hong Kong, Hong Kong, 12th Dec. 2019 - 25th Jan. 2020

Through the Looking-Glass

Exhibition at PRS Asia 2019 (PhD examination), Osage Gallery, Hong Kong, 20 – 25 September 2019

Conferences

ISEA 2018, ISEA 2019

SIGGRAPH 2018, SIGGRAPH 2020, SIGGRAPH 2021
