This project aims to improve the viewing experience of 360-degree stereoscopic VR content by exploiting properties of the human visual system (HVS) and the constant field of view of VR headsets. We rely on our binocular vision system to fuse the two images displayed by the VR headset into a single stereoscopic mental picture. However, many people have slightly unequal refractive power between their eyes, which can result in a sub-optimal stereo experience. We therefore propose an asymmetric approach that applies different degrees of image detail enhancement to the left-eye and right-eye images. A modern VR headset lets the user freely change the viewing angle, but its field of view stays constant at about 120 degrees, so only a limited portion of the 360-degree image is visible at any moment. To further refine the VR viewing experience, we propose making the detail enhancement process view-dependent, so that it dynamically adapts to the visible image content.

The GPU image detail enhancement algorithm used in this project was introduced by Mike Wong in 2017 (published in GPU Zen: Advanced Rendering Techniques).
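The asymmetric, view-dependent idea can be illustrated with a minimal CPU sketch. It is not the GPU algorithm used in the project: a simple 3-tap unsharp mask stands in for the detail enhancement step, the images are assumed to be grayscale equirectangular arrays stored as lists of rows, and the function names, the gain values, and the choice of which eye receives the stronger enhancement are all hypothetical.

```python
def visible_columns(width, yaw_deg, fov_deg=120.0):
    """Columns of an equirectangular image inside the horizontal field of view.

    Assumes column 0 maps to longitude -180 degrees and yaw 0 looks at the
    image centre (an illustrative convention, not taken from the project).
    """
    half = fov_deg / 2.0
    cols = []
    for x in range(width):
        # Longitude at the centre of column x, in [-180, 180).
        lon = (x + 0.5) / width * 360.0 - 180.0
        # Smallest signed angle between the column and the view direction,
        # so the visible window wraps correctly across the image seam.
        diff = (lon - yaw_deg + 180.0) % 360.0 - 180.0
        if abs(diff) <= half:
            cols.append(x)
    return cols


def enhance_row(row, cols, gain):
    """Unsharp-mask one row, but only at the visible columns."""
    width = len(row)
    out = list(row)
    for x in cols:
        # 3-tap horizontal box blur with wrap-around (stand-in low-pass filter).
        blur = (row[(x - 1) % width] + row[x] + row[(x + 1) % width]) / 3.0
        # Add back the high-frequency detail scaled by the per-eye gain.
        out[x] = min(255.0, max(0.0, row[x] + gain * (row[x] - blur)))
    return out


def enhance_stereo(left, right, yaw_deg, left_gain=0.5, right_gain=1.5,
                   fov_deg=120.0):
    """Apply different enhancement strengths to the two eye images.

    The gains are hypothetical; in practice they would be chosen per user.
    """
    cols = visible_columns(len(left[0]), yaw_deg, fov_deg)
    left_out = [enhance_row(r, cols, left_gain) for r in left]
    right_out = [enhance_row(r, cols, right_gain) for r in right]
    return left_out, right_out
```

With a 360-pixel-wide image and a yaw of 0 degrees, `visible_columns` selects the 120 central columns; at a yaw of 180 degrees the selection wraps around the left and right image edges. Pixels outside the visible window are left untouched, which is the point of making the enhancement view-dependent.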

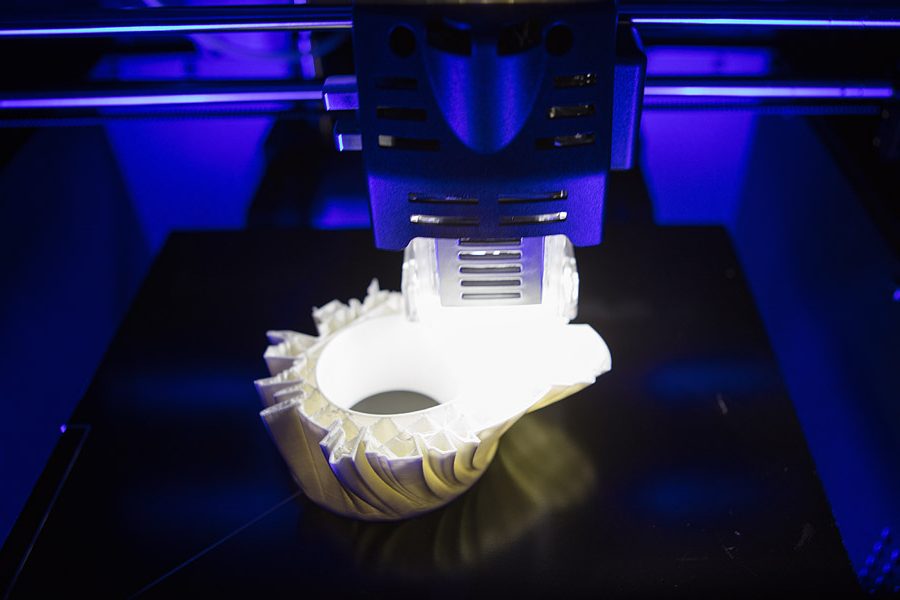

An asymmetrically enhanced 360-degree stereoscopic image sample (original photo from the Insta360 image samples archive).

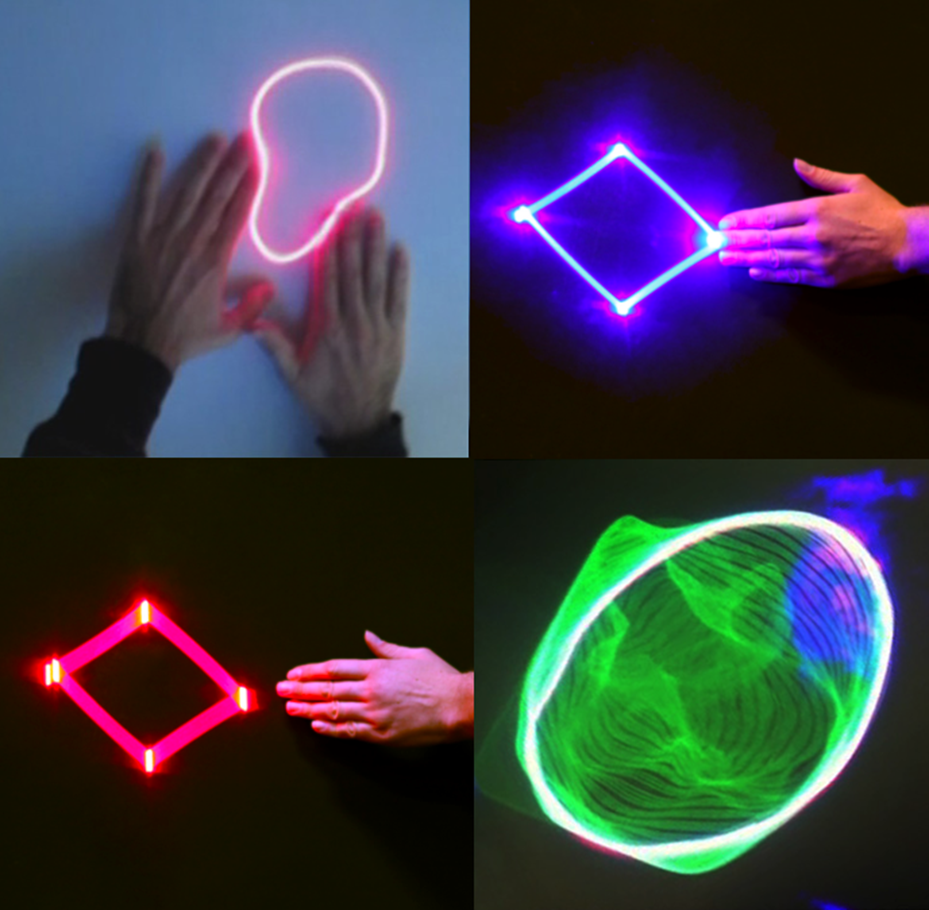

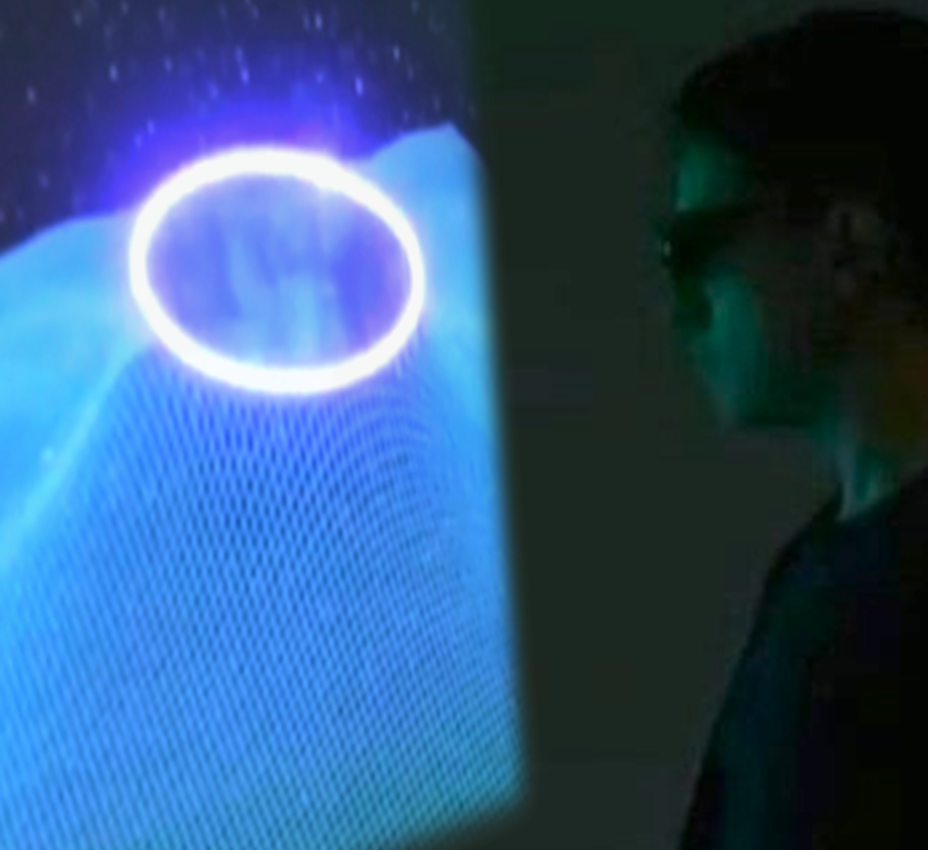

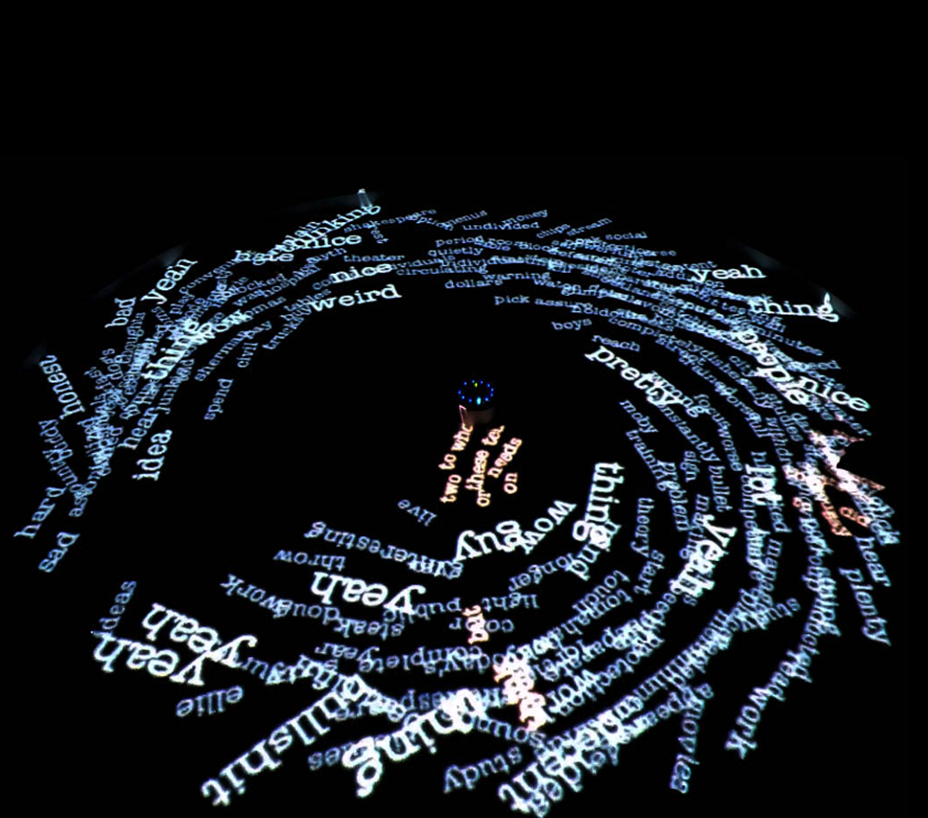

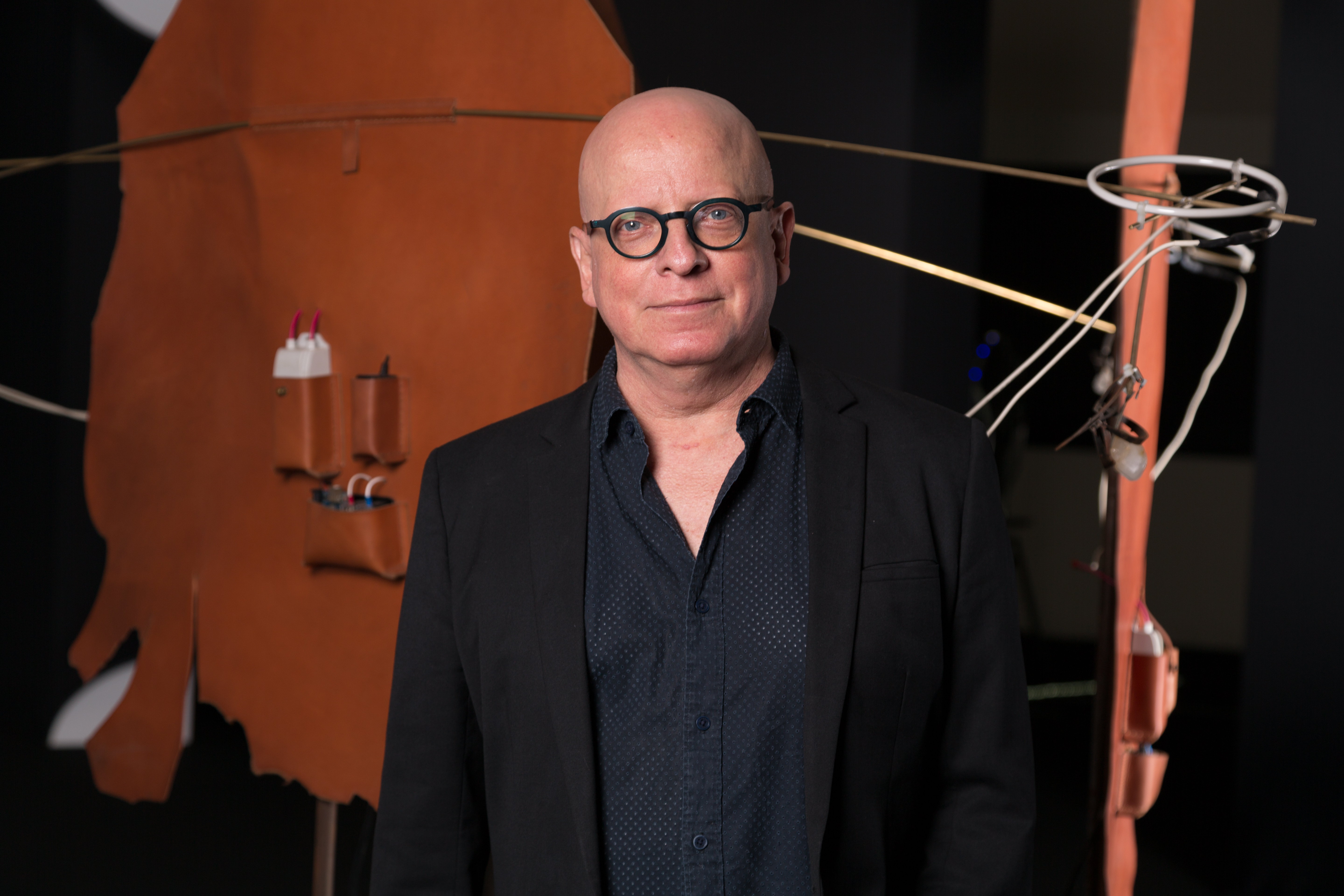

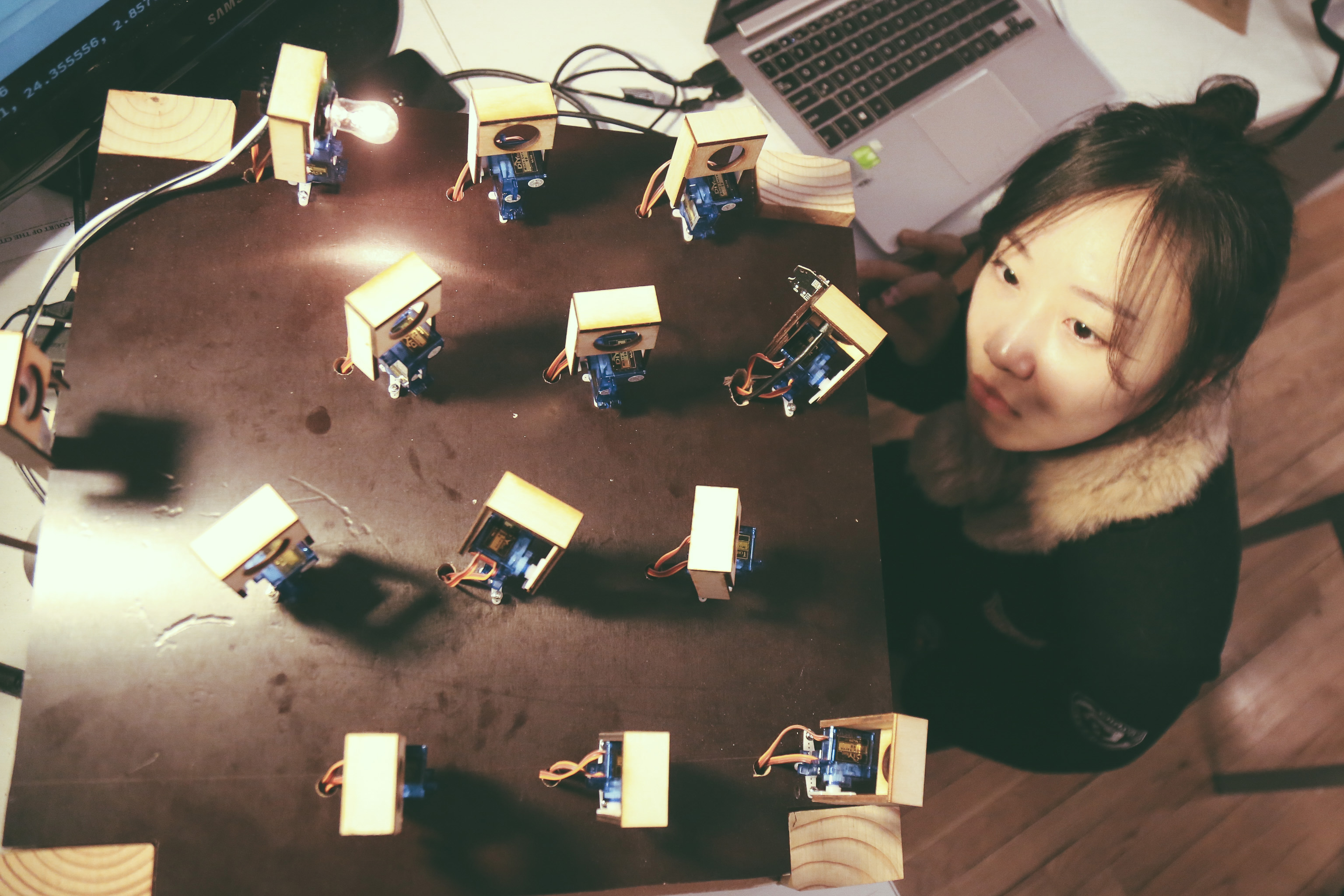

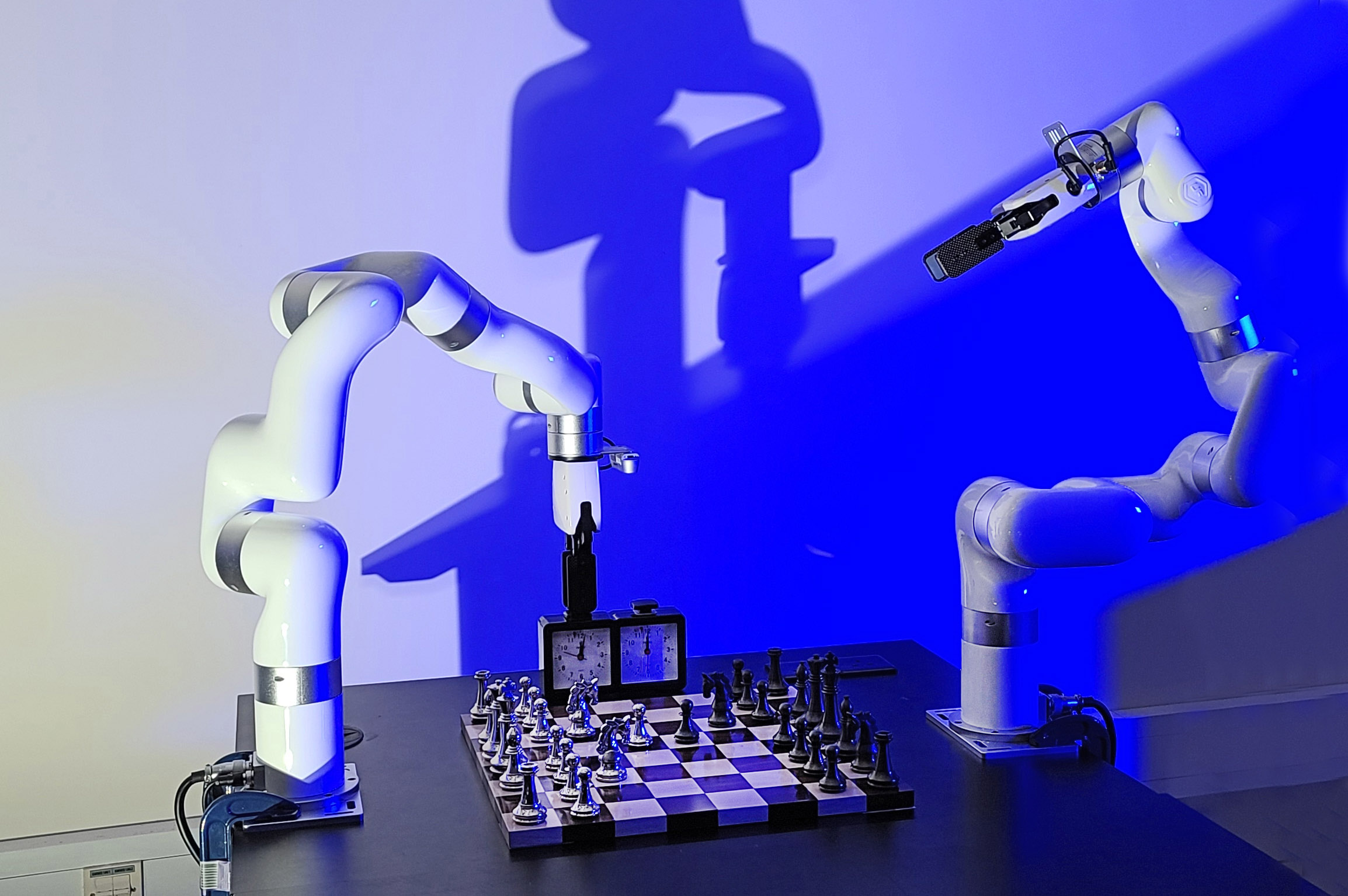

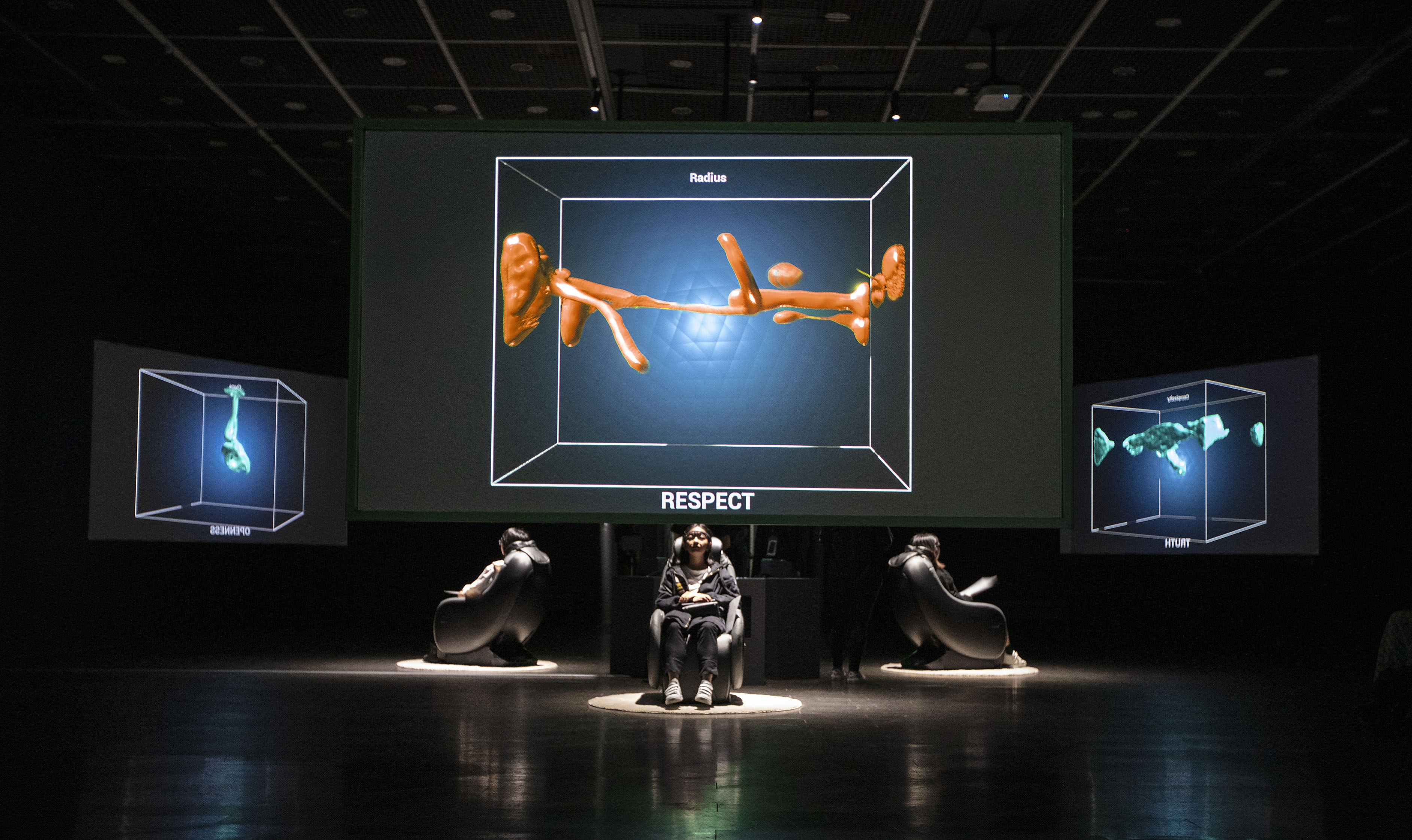

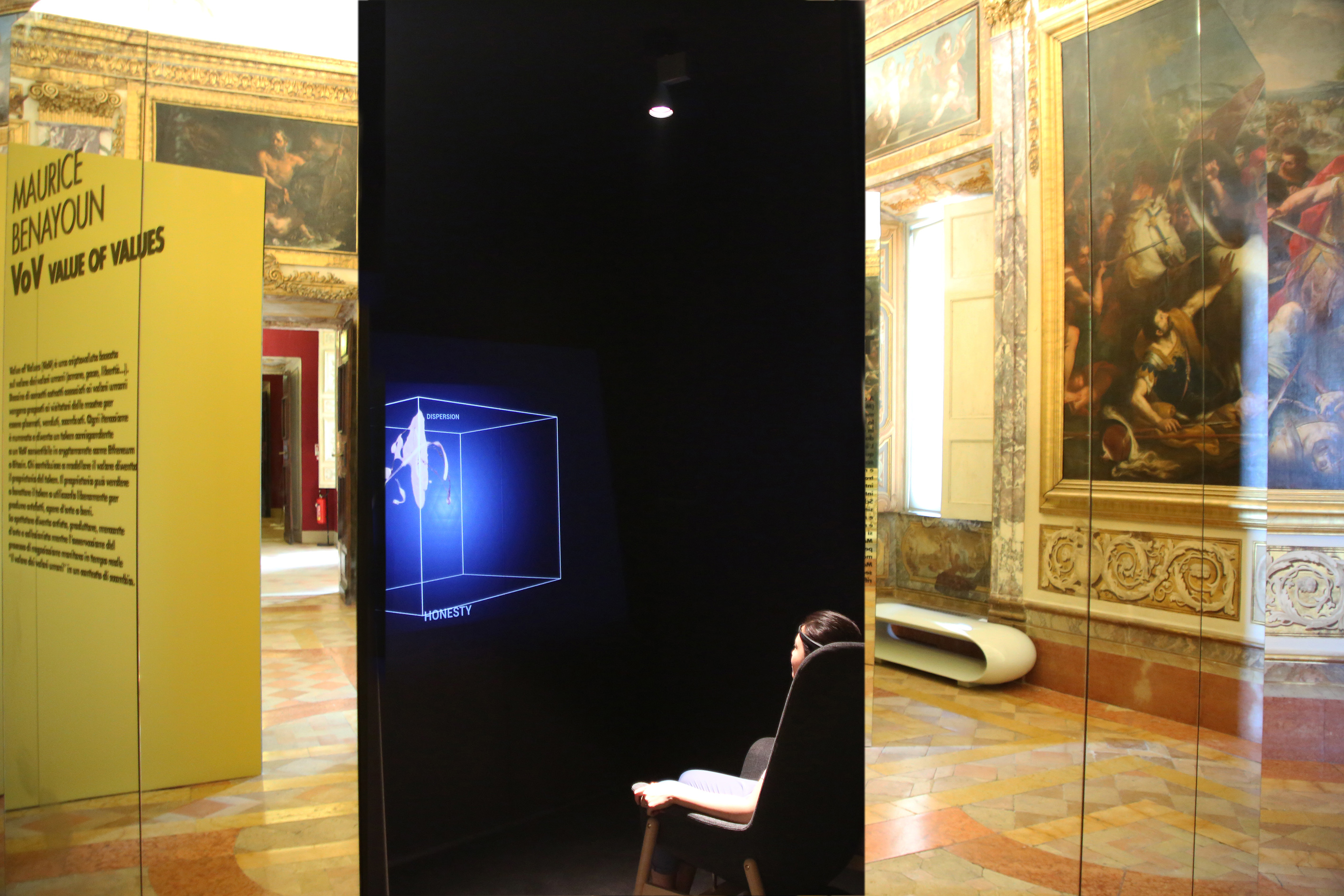

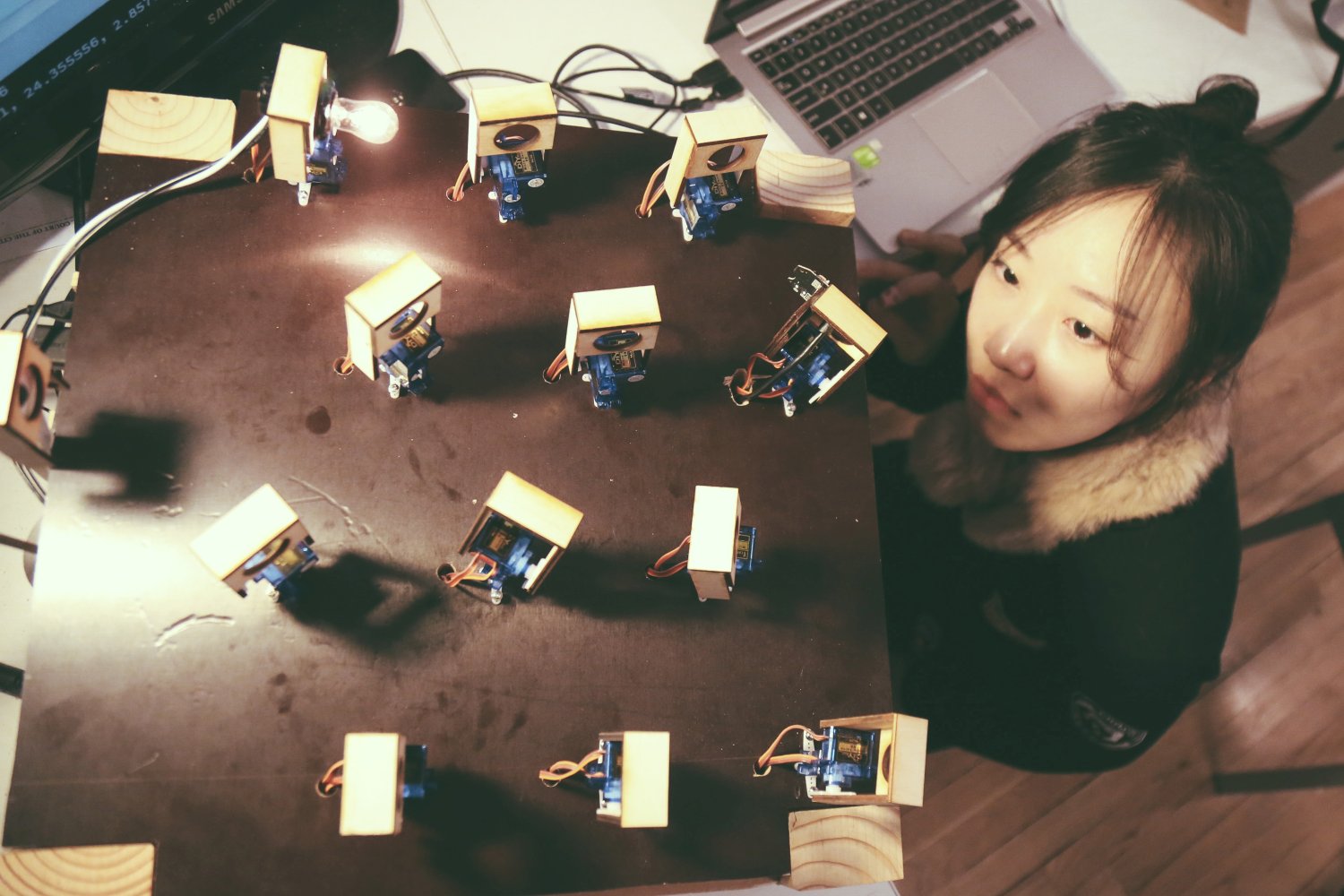

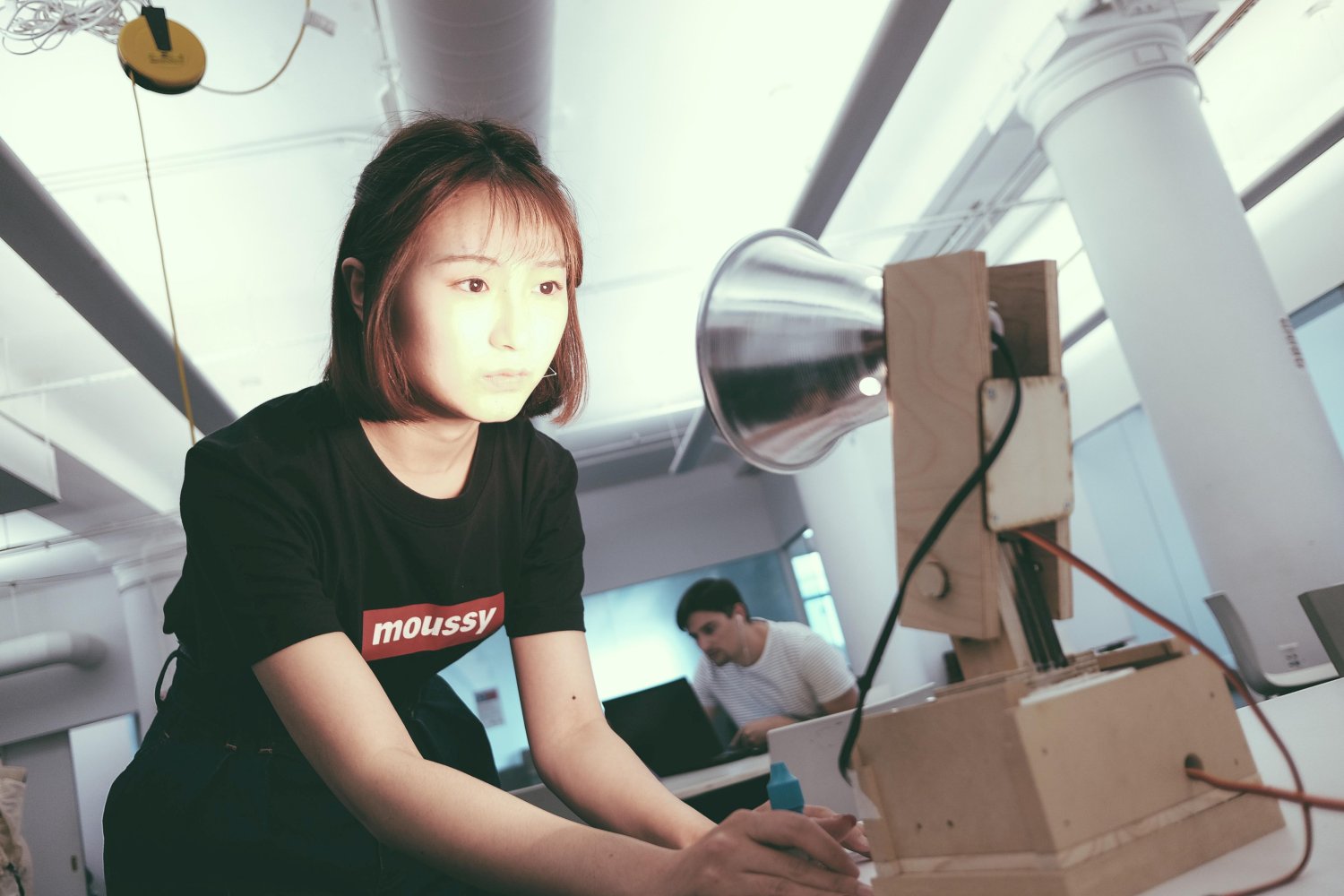

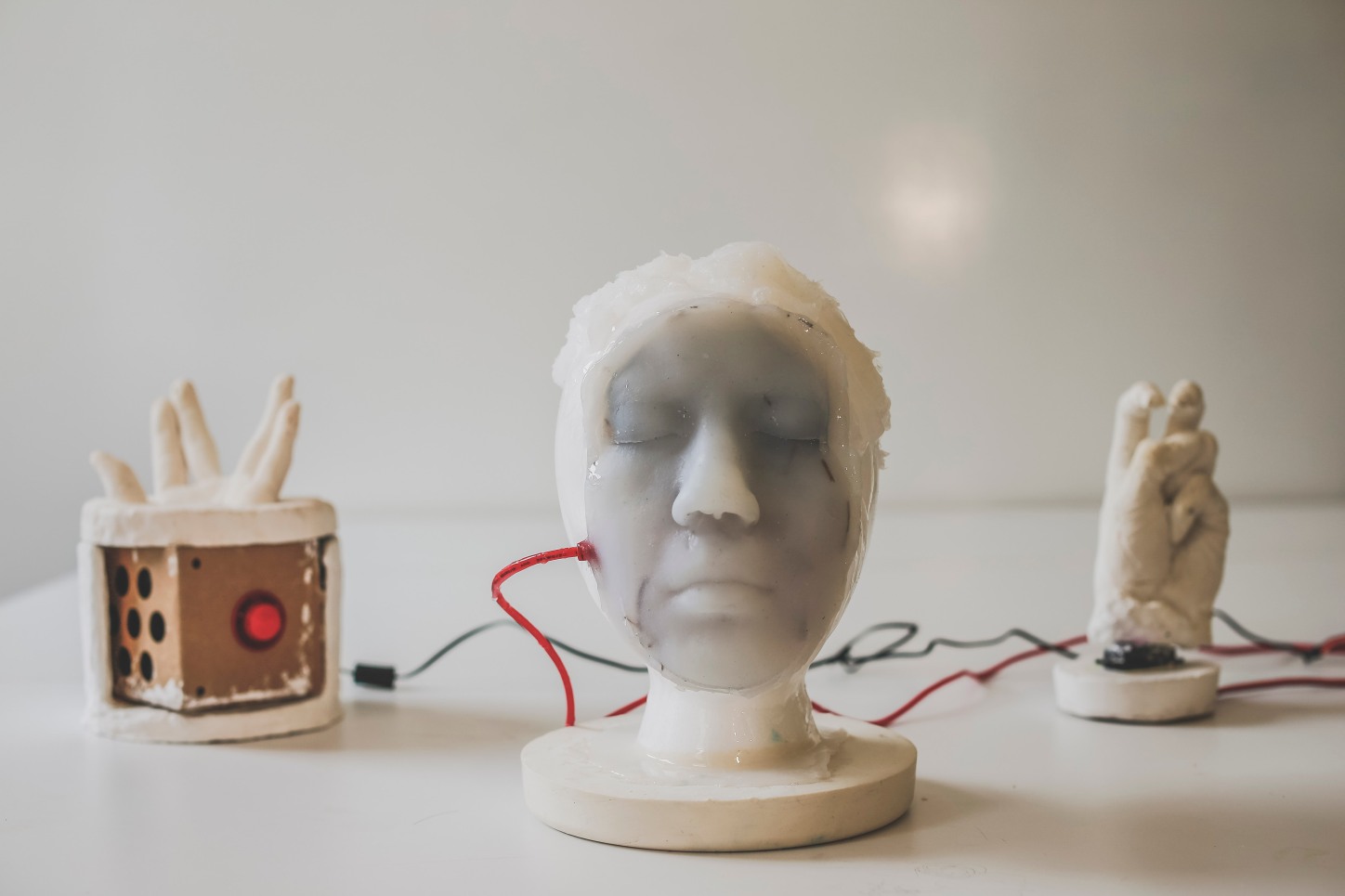

Studying how machine movement gestures affect human perception and behavior, using both physical and virtual testing strategies. This has resulted in work investigating perception of robot-robot dynamics during game play, signaling robot personality through appearances like clothing, using robotics to aid self-identification psychologically, investigating multi-robot signalling with observers, and prototyping strategies using video and VR for human-robot interaction. On-going projects include Social Rejection in Robot-Robot-Human Interaction, using video and robot arms to study how group dynamics affect the interpretation of being rejected by robots; AI as Active Writer, with Dr. Toby Li (Notre Dame), on human perception of a collaborative expressive machine writing agent on a digital platform; Presentation of Self in Machine Life, on human-machine dance performance through virtual and physical telepresence and the expressive gestures of a robot arm; Select, on moral responses of digital game characters; and Connected Gaze, on audience-machine collaboration using interactive gestures in telepresence spaces for performing arts. We would also love to collaborate with Dr. Alvaro Cassinelli on the machine emotional gestures projects.

Key publications:

- LC R, Benayoun M, Lindborg PM, Xu HS, Chan HC, Yip KM, Zhang TY. (2021) “Power Chess: Robot-to-robot nonverbal emotional expression applied to competitive play.” ACM ARTECH 2021: Proceedings of the 10th International Conference on Digital and Interactive Arts. Aveiro, Portugal: 13-15 October. ACM, NYC.

- Friedman N, Love K, LC R, Sabin JE, Hoffman G, Ju W. (2021) “What Robots Need From Clothing.” In ACM Designing Interactive Systems Conference (DIS’21). June 28-July 2.

- LC R, Alcibar A, Baez A, and Torossian S. (2020) “Machine Gaze: Self-Identification Through Play With a Computer Vision-Based Projection and Robotics System.” Frontiers in Robotics and AI: Human-Robot Interaction.

- LC R. (2021) “Now You See Me, Now You Don’t: Revealing personality and narratives from playful interactions with machines being watched.” Proceedings of the 15th International Conference on Tangible, Embedded, and Embodied Interaction (TEI’21).

- Zamfirescu-Pereira JD, Sirkin D, Goedicke D, LC R, Friedman N, Mandel I, Martelaro N, Ju W. (2021) “Fake It to Make It: Exploratory Prototyping in HRI.” Companion Proceedings of the 2021 ACM IEEE International Conference on Human-Robot Interaction (HRI’21).

Key personnel:

- RAY LC, Hongshen Xu, Zijing Lillian Song, Toby Li (collab).

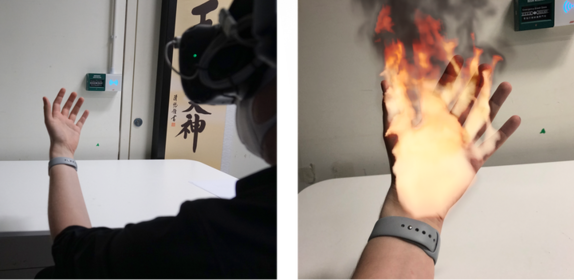

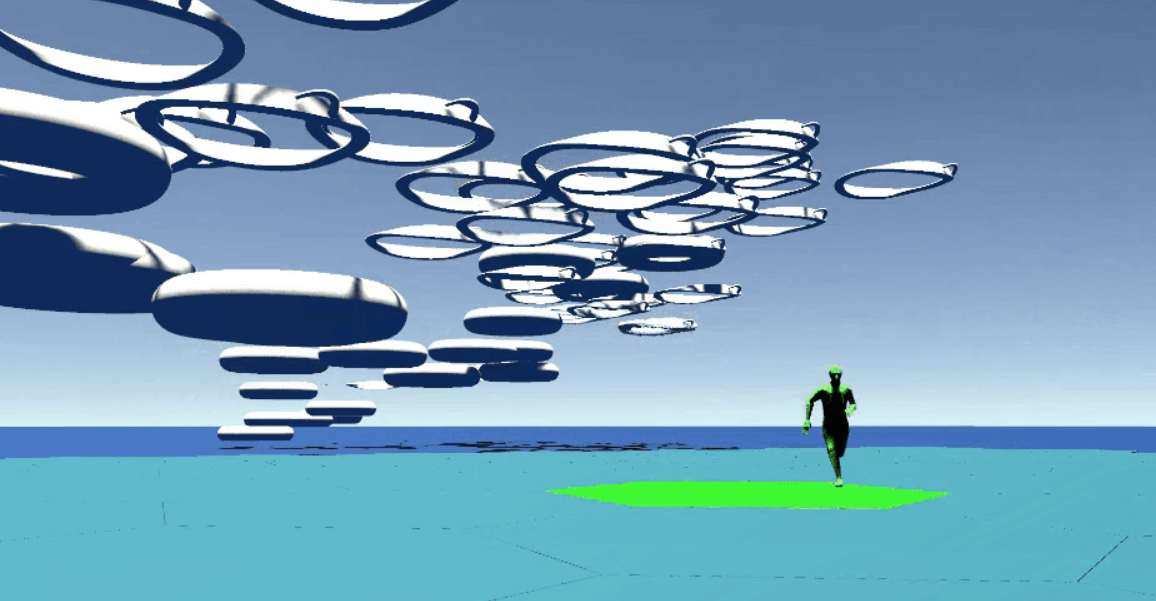

Applying digital (VR) and physical environments to support human collaboration, enhance human-machine collaborative work, induce emotional responses, or aid rehabilitation from mental illness. This has resulted in work looking at how human-robot interactions change based on seating arrangement, mixed reality approaches to active game play, VR spaces for systematic desensitization approaches in speech therapy, and comparisons of digital and physical interventions in augmented spaces. On-going projects include Configuration of Space, with Dr. Zhicong Lu (CityU CS), on the way digital spaces like Ohyay use configurations to change human-human collaboration and drive changes in perception; Virtual Diagnostics, with Dr. Julian Lai (CityU Psyc), on the use of VR games to assess potential fear of intimacy and isolation-related mental disorders in youth populations; Empathy for Refugee in VR, on active navigation of refugee environments for fostering empathy; and Weaved Wearable Sensors for VR, using tangible wearable components to detect stress and anxiety in the context of VR rehabilitation.

Key publications:

- LC R, Friedman N, Zamfirescu-Pereira JD, and Ju W. (2020) “Agents of Spatial Influence: Designing incidental interactions with arrangements and gestures.” HRI '20 Workshop: The Forgotten HRI: Incidental encounters with robots in public spaces. In 2020 ACM IEEE International Conference on Human-Robot Interaction.

- Liu Y, Si Y, LC R, Harteveld C. (2021) “cARd: Mixed Reality Approach for a Total Immersive Analog Game Experience.” In: Arai K., Kapoor S., Bhatia R. (eds) Proceedings of the Future Technologies Conference (FTC), Vol. 2. Advances in Intelligent Systems and Computing, vol 1289.

- LC R and Fukuoka Y. (2019) “Machine Learning and Therapeutic Strategies in VR.” ARTECH 2019: Proceedings of the 9th International Conference on Digital and Interactive Arts. Braga, Portugal: 42, 1-6.

- Coutu Y, Chang Y, Zhang W, Sengun S, and LC R. (2020) “Immersiveness and usability in VR: a comparative study of Monstrum and Fruit Ninja.” In Bostan: Game User Experience and Player-Centered Design. International Series on Computer Entertainment, Springer.

- LC R, and Monir F. (2020) “A Case for Play: Immersive Storytelling of Rohingya Refugee Experience.” Media-N Journal of the New Media Caucus. Issue on NEoN Digital Arts Re@ct Social Change Art Technology. Dundee, UK. Online.

Key personnel:

- RAY LC, Lina Zhang, Luoying Lin, Zhicong Lu (collab), Julian Lai (collab).