From the 1970s through the 1990s, the Korean film market, like the markets of many countries around the world, was dominated by Hollywood. The majority of film critics, students, and industry professionals viewed the future of South Korean cinema as bleak. Surprisingly, in 2001, South Korea's film industry became the first in recent history to reclaim its domestic market from Hollywood. Since then, South Korean cinema has made history. Indeed, it provides one of the most striking case studies of non-Western cinematic success in the age of the neoliberal world order and Hollywood’s domination of the global film market. What happened to South Korean film culture between 1992 and 2003? How did what was once an “invisible” cinema become one of the world’s most influential film industries so quickly? And what implications does the South Korean film renaissance have for the ways we approach national and transnational cinema more broadly?

ACR Lab’s project “The South Korean Film and Media Industry” will host an international symposium on the subject and also publish a series of books, journal articles, and special issues. The South Korean Film Industry, the project’s first outcome, is the first detailed scholarly overview of the South Korean film industry. This edited volume maps out a compelling and authoritative vision of how that field may be approached in historical and industrial terms.

Forthcoming Publications

The South Korean Film Industry

Edited by Sangjoon Lee (lead editor), Dal Yong Jin, and Junhyoung Cho. University of Michigan Press (August 2024).

https://press.umich.edu/Books/T/The-South-Korean-Film-Industry2

Published Special Issue

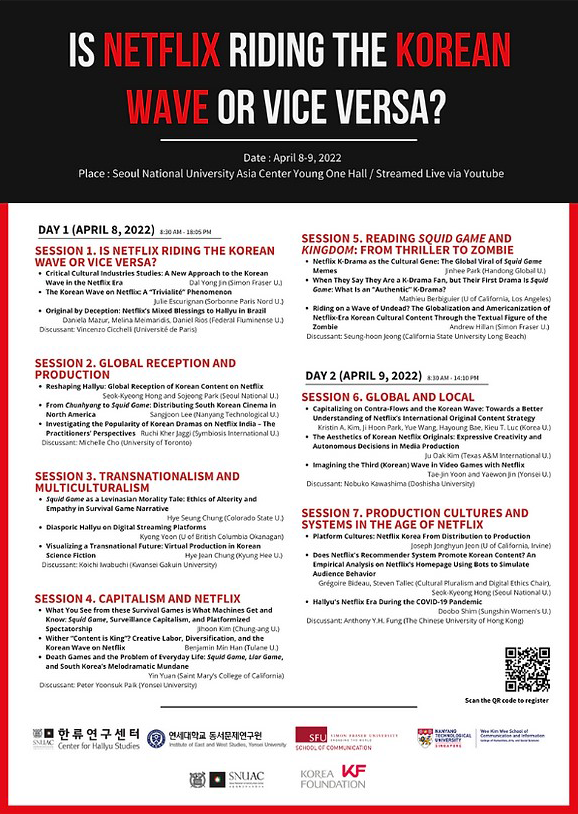

“Is Netflix Riding the Korean Wave or Vice Versa?”

International Journal of Communication 17:1 (November 2023).

Edited by Dal Yong Jin, Sangjoon Lee, and Seok-Kyeong Hong.

https://ijoc.org/index.php/ijoc/article/view/20718

Ongoing Project

Netflix and the South Korean Media

Edited by Sangjoon Lee (lead editor), Dal Yong Jin, and Seok-Kyeong Hong.

Brill (expected in 2025).