TipText (Kening Zhu) investigates new text entry techniques based on micro thumb-tip gestures, specifically a miniature QWERTY keyboard residing invisibly on the first segment of the index finger. Text is entered by tapping the tip of the index finger with the thumb-tip. The keyboard layout is optimized for eyes-free input by utilizing a spatial model reflecting the users' natural spatial awareness of key locations on the index finger. Our user evaluation showed that participants achieved an average text entry speed of 11.9 WPM and were able to type as fast as 13.3 WPM towards the end of the experiment. Winner: Best Paper Award, ACM UIST 2019.
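For readers unfamiliar with the WPM figures quoted above, here is a minimal sketch of a definition commonly used in text-entry research, where one "word" is five characters and the first character is excluded from timing. Whether the TipText evaluation used exactly this formula is an assumption, and the function name `words_per_minute` is hypothetical.

```python
def words_per_minute(transcribed: str, seconds: float) -> float:
    """Text-entry speed in words per minute.

    Assumes the common convention of 5 characters per word and
    (len - 1) timed characters, since timing usually starts at the
    first keystroke. This convention is an assumption, not taken
    from the project description.
    """
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    timed_chars = max(len(transcribed) - 1, 0)
    return (timed_chars / seconds) * 60.0 / 5.0


# A 25-character phrase entered in 24 seconds: 24 timed characters.
print(words_per_minute("the quick brown fox jumps", 24.0))
```

Under this convention, a rate of one timed character per second corresponds to 12 WPM, which is close to the average speed reported above.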

PI: Miu Ling Lam

AIFNet is a deep neural network for removing spatially-varying defocus blur from a single defocused image. We leverage light field synthetic aperture and refocusing techniques to generate a large set of realistic defocused and all-in-focus image pairs depicting a variety of natural scenes for network training. AIFNet consists of three modules: defocus map estimation, deblurring, and domain adaptation. The effects and performance of various network components are extensively evaluated. We also compare our method with existing solutions using several publicly available datasets. Quantitative and qualitative evaluations demonstrate that AIFNet achieves state-of-the-art performance.

For more information about the project, please visit:

https://sweb.cityu.edu.hk/miullam/AIFNET/

PI: Kening Zhu

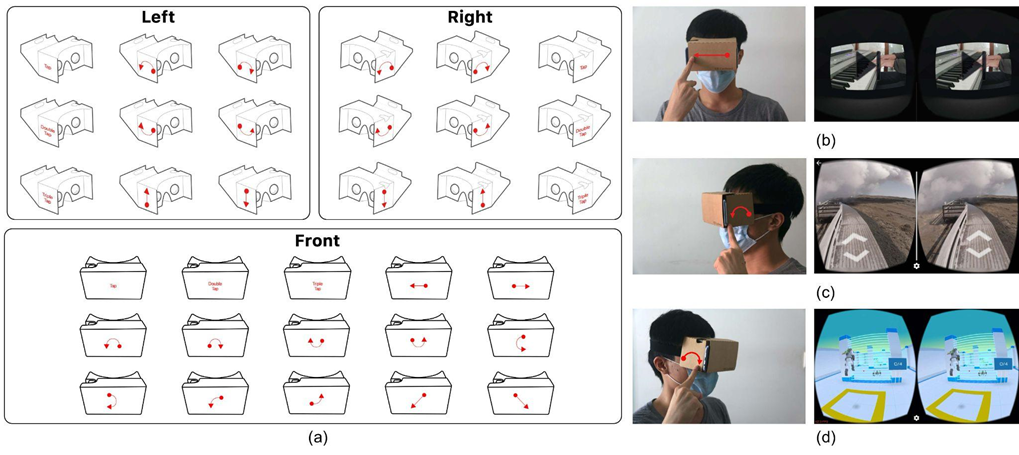

Chen, Taizhou, Lantian Xu, Xianshan Xu, and Kening Zhu (*). "GestOnHMD: Enabling Gesture-based Interaction on the Surface of Low-cost VR Head-Mounted Display". IEEE Transactions on Visualization and Computer Graphics, vol. 27, no. 5, pp. 2597-2607, May 2021, doi: 10.1109/TVCG.2021.3067689.

Low-cost virtual-reality (VR) head-mounted displays (HMDs) that integrate smartphones have brought immersive VR to the masses and increased the ubiquity of VR. However, these systems are often limited by their poor interactivity. In this paper, we present GestOnHMD, a gesture-based interaction technique and a gesture-classification pipeline that leverages the stereo microphones in a commodity smartphone to detect tapping and scratching gestures on the front, left, and right surfaces of a mobile VR headset. Taking the Google Cardboard as our focused headset, we first conducted a gesture-elicitation study to generate 150 user-defined gestures, with 50 on each surface. We then selected 15, 9, and 9 gestures for the front, left, and right surfaces respectively, based on user preferences and signal detectability. We constructed a data set containing the acoustic signals of 18 users performing these on-surface gestures, and trained deep-learning classification models for gesture detection and recognition. The three-step pipeline of GestOnHMD achieved an overall accuracy of 98.2% for gesture detection, 98.2% for surface recognition, and 97.7% for gesture recognition. Lastly, with a real-time demonstration of GestOnHMD, we conducted a series of online participatory-design sessions to collect a set of user-defined gesture-referent mappings that could potentially benefit from GestOnHMD.
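The three-step structure described above (gesture detection, then surface recognition, then gesture recognition) can be sketched as a simple cascade. This is only an illustration under stated assumptions: the class `Cascade`, the use of one gesture model per surface, and the toy stand-in classifiers are all hypothetical and are not the trained deep-learning models from the paper. Only the per-surface gesture counts come from the text.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional, Sequence, Tuple

# Gesture counts per surface, as reported in the description above.
GESTURES_PER_SURFACE = {"front": 15, "left": 9, "right": 9}

Audio = Sequence[float]  # hypothetical: one clip of audio samples


@dataclass
class Cascade:
    detect: Callable[[Audio], bool]            # step 1: is there a gesture?
    surface: Callable[[Audio], str]            # step 2: which surface?
    gesture: Dict[str, Callable[[Audio], int]] # step 3: which gesture (assumed per surface)

    def classify(self, clip: Audio) -> Optional[Tuple[str, int]]:
        if not self.detect(clip):
            return None  # no gesture in this clip, stop early
        side = self.surface(clip)
        label = self.gesture[side](clip)
        if not 0 <= label < GESTURES_PER_SURFACE[side]:
            raise ValueError(f"label {label} out of range for {side}")
        return side, label


# Toy stand-ins so the sketch runs; real models would replace these.
toy = Cascade(
    detect=lambda clip: max(abs(x) for x in clip) > 0.5,
    surface=lambda clip: "front",
    gesture={side: (lambda clip: 0) for side in GESTURES_PER_SURFACE},
)

print(toy.classify([0.0, 0.1]))  # quiet clip, rejected at step 1
print(toy.classify([0.0, 0.9]))  # loud clip, passed through all three steps
```

Ordering the steps this way lets the cheap detection step reject most background audio before the later classifiers run.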

For more information about the project, please visit https://meilab-hk.github.io/projectpages/gestonhmd.html

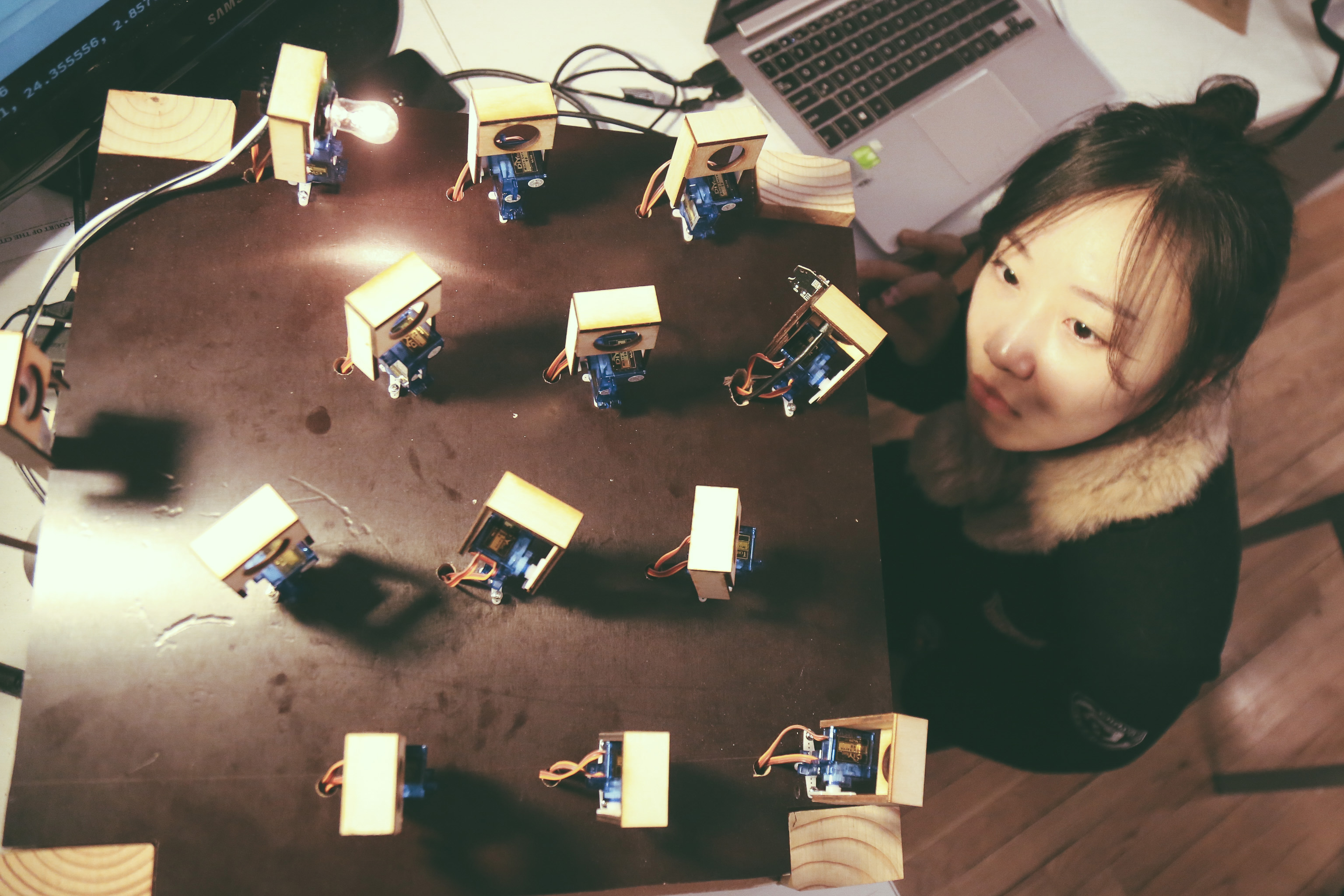

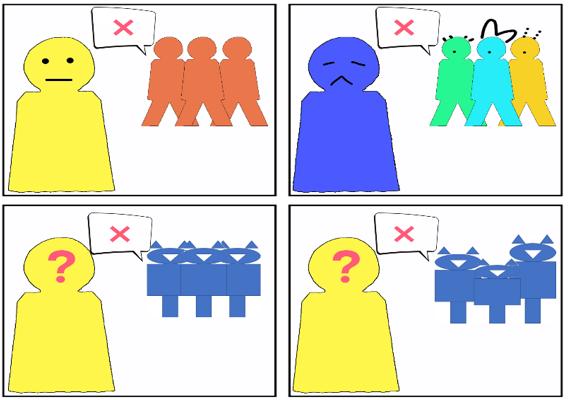

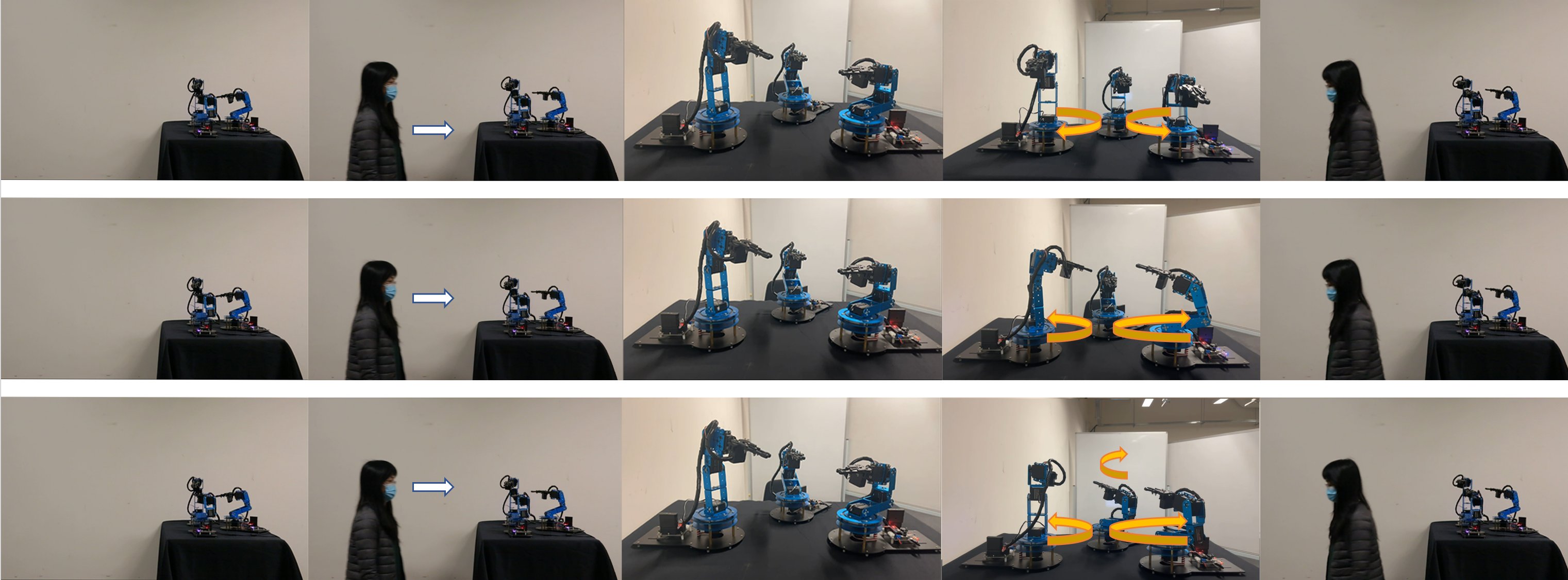

As robots become more ubiquitous, the way we interact with them will involve human-like emotional interactions. For example, what happens if we interrupt a robot at work, and the robot stares at us? Our work investigates how groups of social robots in the environment react in human-robot collaborative contexts. In one recent HRI study, we investigated the cohesiveness of robot groups, termed entitativity, and how it affects humans' willingness to engage with the group and alters their perception of threat and cooperativity. To see how group composition affects how people perceive negative social intent from robots, we showed subjects videos of ways that humans are socially rejected by robots under high and low entitativity conditions. The results reveal that when robotic groups have less entitativity (cohesiveness), the sense of rejection is greater, showing that humans experience increased anxiety over being rejected by more diverse groups of agents. We seek to understand how the social consequences of robot group dynamics are perceived, and hence to avoid unanticipated conflict and negative perception of robot responses.

For more information, please visit https://recfro.github.io/pubs/

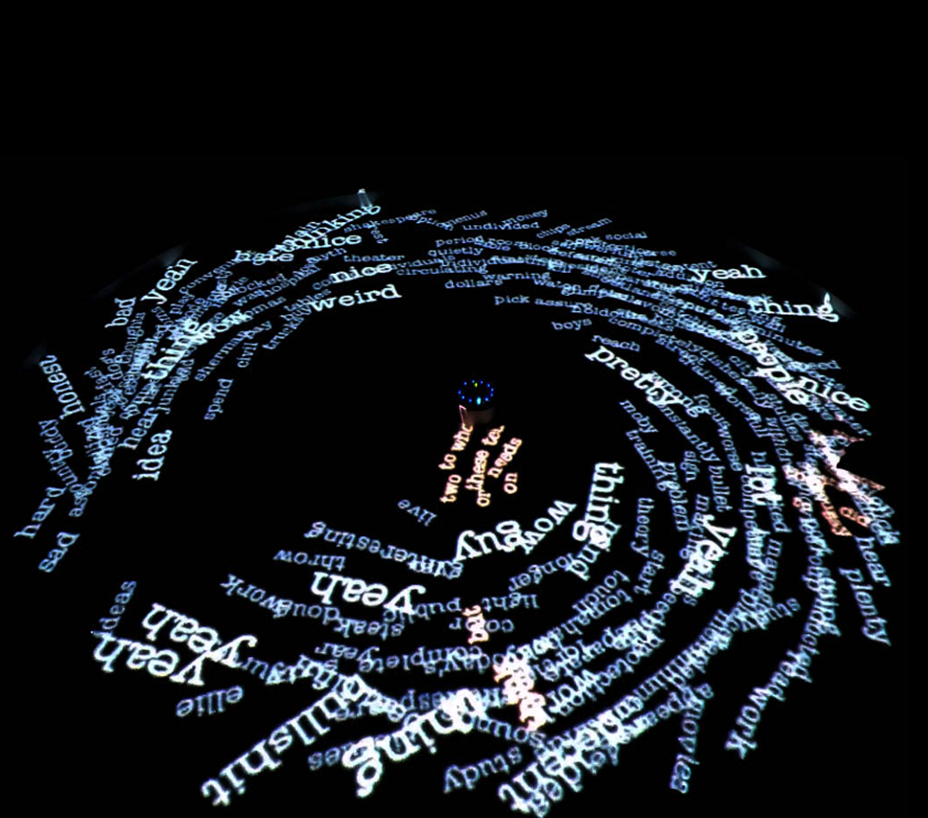

An interactive installation inviting the public to hold a discussion around a table. As soon as a word is uttered, it begins a life of its own on the table's surface, desperate to join semantically close friends.

More details: https://augmentedmaterialitylab.org/2021/08/31/viva-voce/

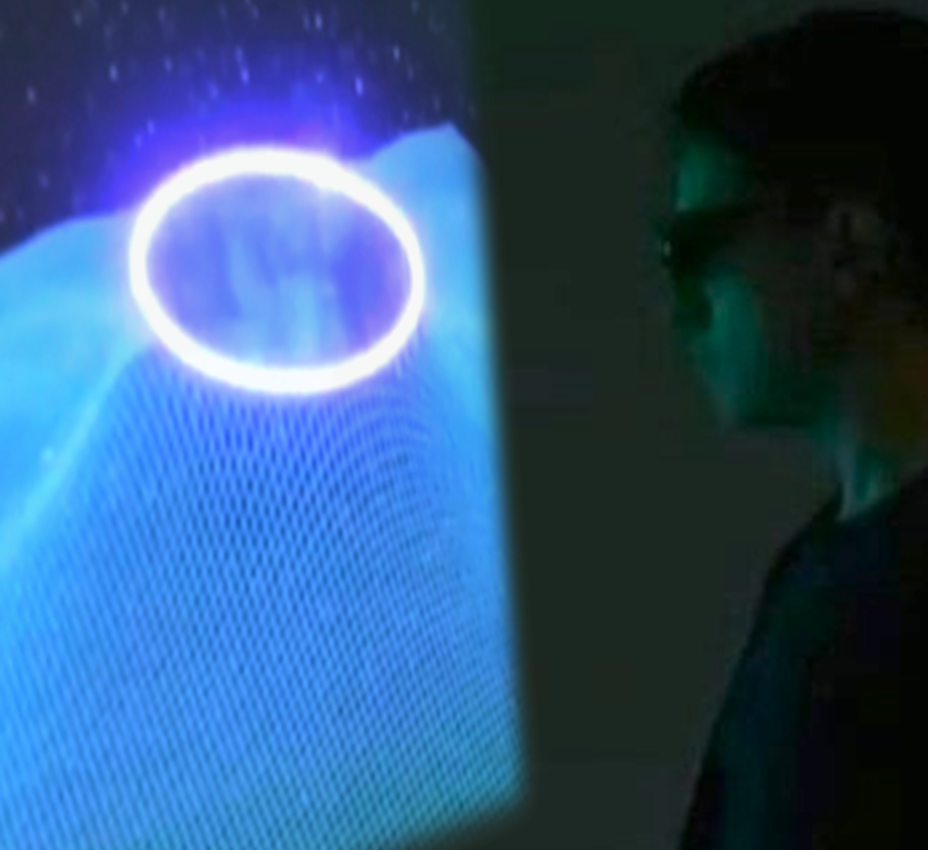

An interactive installation exploring the relationship between shape, geometry, resonance and energy.

More details: https://augmentedmaterialitylab.org/2022/06/03/cymatic-ground-2022/

Integrating and synchronising 3D stereoscopic laser-based vector graphics with conventional stereoscopic video projection systems.

More details: https://augmentedmaterialitylab.org/2021/08/18/stereoscopic-laser-graphics/

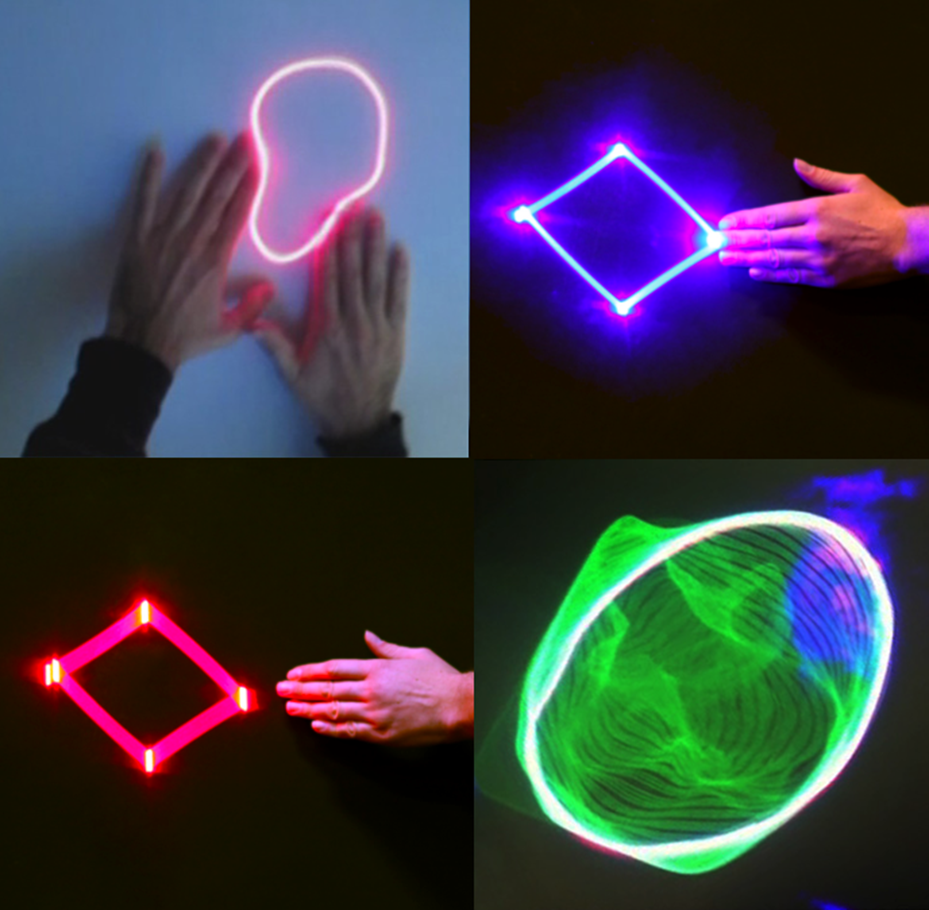

An interactive laser-based projection system capable of sensing touch at a distance without the use of external cameras.

More details: https://augmentedmaterialitylab.org/2021/08/31/laser-sensing-display/

A modular multi-camera system for remote teaching providing students with multi-perspective AI-curated content.

More details: https://augmentedmaterialitylab.org/2021/08/01/reverse-panopticon/