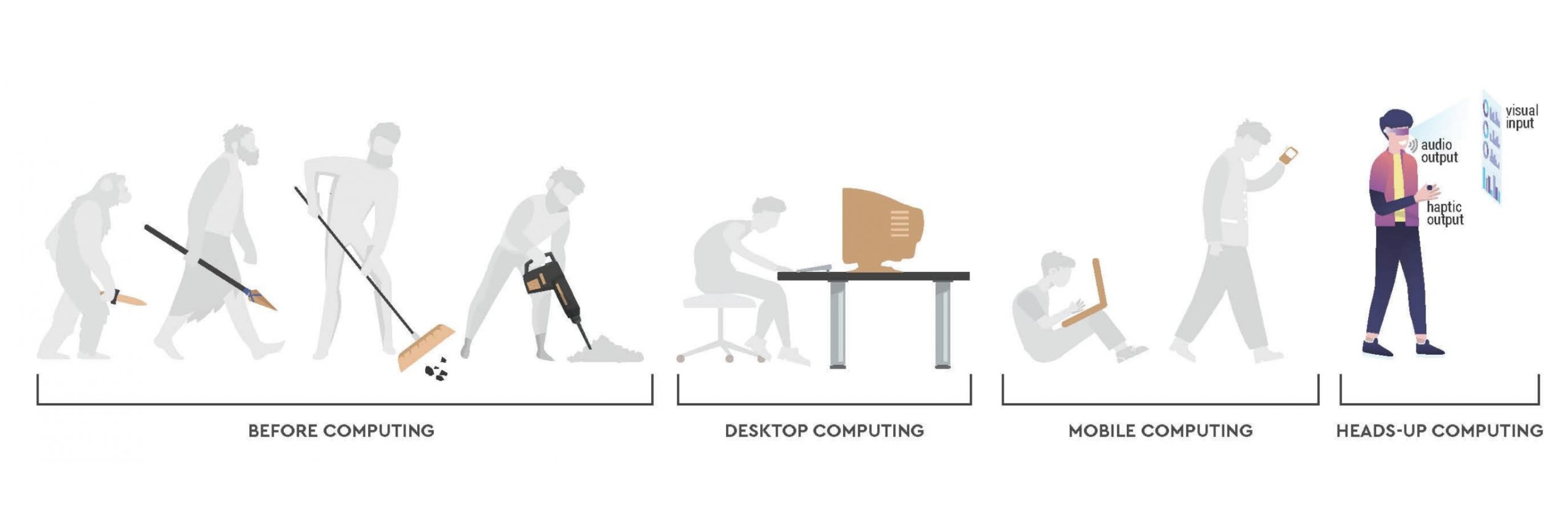

Our project aims to develop a wearable device platform that departs significantly from traditional screen-based interaction. By relying on voice and gesture commands, it seeks to create a more natural and intuitive user experience in which individuals remain engaged with their physical surroundings while accessing and interacting with digital information. This approach is intended to provide seamless, just-in-time, intelligent computing support that enhances productivity, safety, and overall quality of life without the cognitive and social drawbacks associated with current mobile technologies.

Key areas of focus for the project include:

- Hardware Design: The development of lightweight, comfortable, and aesthetically pleasing wearable devices capable of supporting advanced computing functionalities. This involves integrating sensors and input/output mechanisms that are conducive to voice and gesture-based interactions, ensuring that the technology is both accessible and practical for everyday use.

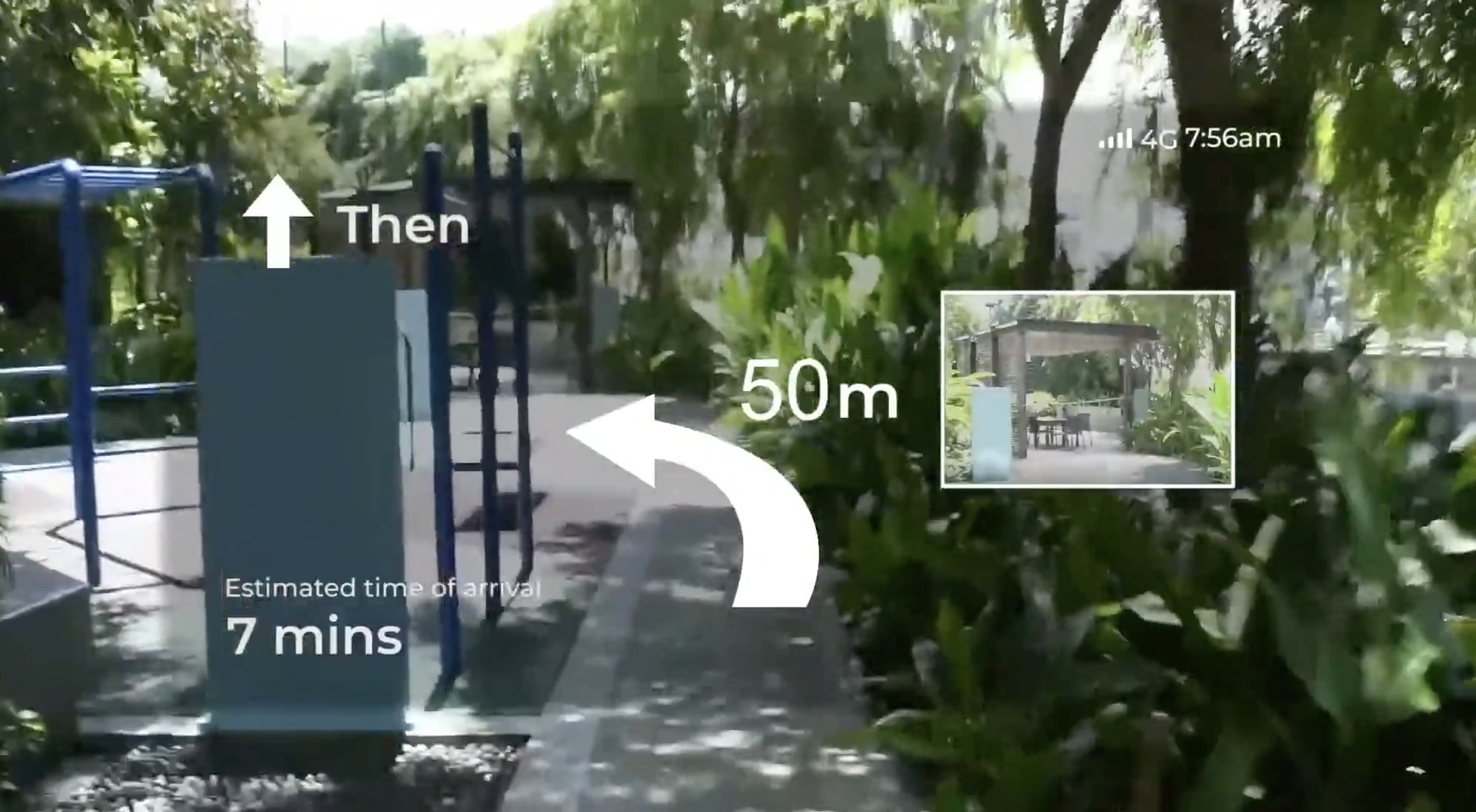

- Interaction Models: The creation of innovative interaction paradigms that can accurately interpret and respond to natural human behaviors. This includes refining voice recognition and gesture detection algorithms to understand a wide range of commands and gestures, thereby allowing for a more fluid and flexible user experience. We also investigate the use of multiple modes of interaction to provide a more robust and adaptable computing experience. By offering a variety of input and output options, the system can cater to diverse user preferences and situational requirements, ensuring that the technology remains effective across different contexts and use cases.

- Integration Methodologies: The exploration of strategies to blend computing seamlessly into the user's sensory experience. This entails designing context-aware systems that adjust intelligently to the user's environment and activities, providing relevant information and support without unnecessary interruptions or distractions. The project aims to balance digital connectivity with real-world presence, enabling users to access digital resources in a manner that feels like an extension of their natural senses.
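
To make the idea of context-aware, multimodal output more concrete, the following is a minimal sketch of how a system might choose between speaking, displaying, or deferring a piece of information. It is an illustration only, not the project's implementation: the names `Activity`, `Delivery`, `Context`, `Notification`, and `choose_delivery`, as well as the 70 dB noise threshold, are hypothetical assumptions introduced for this example.

```python
from dataclasses import dataclass
from enum import Enum


class Activity(Enum):
    """Hypothetical coarse activity labels inferred from sensors."""
    IDLE = "idle"
    WALKING = "walking"
    CONVERSING = "conversing"


class Delivery(Enum):
    """Hypothetical output options for a piece of information."""
    AUDIO = "audio"    # spoken through an earpiece
    VISUAL = "visual"  # shown on a heads-up display
    DEFER = "defer"    # held back until the context changes


@dataclass(frozen=True)
class Context:
    activity: Activity
    ambient_noise_db: float  # estimated ambient noise level


@dataclass(frozen=True)
class Notification:
    text: str
    urgent: bool


# Assumed threshold above which spoken output is hard to hear.
NOISY_DB = 70.0


def choose_delivery(ctx: Context, note: Notification) -> Delivery:
    """Pick an output mode that fits the user's current situation."""
    # Non-urgent information waits while the user is talking to someone.
    if ctx.activity is Activity.CONVERSING and not note.urgent:
        return Delivery.DEFER
    # Speech would compete with a conversation or be lost in noise,
    # so fall back to the display.
    if ctx.activity is Activity.CONVERSING or ctx.ambient_noise_db >= NOISY_DB:
        return Delivery.VISUAL
    # Otherwise prefer audio, which keeps the eyes on the surroundings.
    return Delivery.AUDIO
```

For example, under these assumed rules a non-urgent message arriving during a conversation would be deferred, while the same message arriving on a quiet walk would be spoken. A real system would likely replace the hand-written rules with learned models and richer sensor input.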

The ultimate goal of the "Heads-Up Computing" project is to pioneer a new era of mobile computing that enhances human capabilities and interactions without the downsides of screen dependency. Through innovative hardware design, intuitive interaction models, and intelligent integration methodologies, the project seeks to create a future where technology serves to augment human experiences in a way that is both empowering and harmonious with the physical world.